Evolving and Sustaining Ocean Best Practices and Standards for the Next Decade

- 1Institute of Electrical and Electronics Engineers, Paris, France

- 2U.S. IOOS QARTOD Project, Virginia Beach, VA, United States

- 3Sorbonne Université, Centre National de la Recherche Scientifique, Laboratoire d’Océanographie de Villefranche, Villefranche-sur-Mer, France

- 4GEOMAR, Helmholtz Centre for Ocean Research Kiel, Kiel, Germany

- 5HGF-MPG Group for Deep Sea Ecology and Technology, Alfred-Wegener-Institut, Helmholtz-Zentrum für Polar- und Meeresforschung, Bremerhaven, Germany

- 6Central Caribbean Marine Institute, Little Cayman, Cayman Islands

- 7Institute for Science and Ethics, Nice, France

- 8College of Marine Science, University of South Florida, St. Petersburg, FL, United States

- 9Data Centre Facility, Balearic Islands Coastal Observing and Forecasting System, Palma de Mallorca, Spain

- 10UNESCO/IOC Project Office for IODE, IOC Capacity Development, Intergovernmental Oceanographic Commission, Oostende, Belgium

- 11Woods Hole Oceanographic Institution, Woods Hole, MA, United States

- 12South African Environmental Observation Network, Cape Town, South Africa

- 13Global Ocean Observing System, United Nations Educational, Scientific and Cultural Organization, Paris, France

- 14Data Stewardship and Operations Support, Ocean Networks Canada, Victoria, BC, Canada

- 15Oceanography Section, Istituto Nazionale di Oceanografia e di Geofisica Sperimentale, Trieste, Italy

- 16Department for Physical Oceanography and Instrumentation, Leibniz Institute for Baltic Sea Research Warnemünde, Rostock, Germany

- 17Institut Français de Recherche pour l’Exploitation de la Mer, Brest, France

- 18National Centers for Environmental Information, National Oceanic and Atmospheric Administration, Stennis Space Center, MS, United States

- 19US IMAGO, Institut de Recherche pour le Développement, Brest, France

- 20Institute for the Study of Anthropogenic Impacts and Sustainability of the Marine Environment, National Research Council of Italy, Genoa, Italy

- 21National Oceanography Centre, Liverpool, United Kingdom

- 22Pacific Marine Environmental Laboratory, National Oceanic and Atmospheric Administration, Seattle, WA, United States

- 23Institute of Oceanography, Madrid, Spain

- 24PLOCAN, Oceanic Platform of the Canary Islands, Telde, Spain

- 25Department of Ocean Standardization Management, National Center of Ocean Standards and Metrology, Tianjin, China

- 26Marine Institute, Galway, Ireland

- 27IMT Atlantique, Brest, France

- 28National Institute of Geophysics and Volcanology, Rome, Italy

- 29National Oceanography Centre, Natural Environment Research Council–University of Southampton, Southampton, United Kingdom

- 30Ocean Systems Test and Evaluation Program, Center for Operational Oceanographic Products and Services, National Ocean Service, National Oceanic and Atmospheric Administration, Chesapeake, VA, United States

- 3152°North GmbH, Münster, Germany

- 32Integrated Marine Observing System, University of Tasmania, Hobart, TAS, Australia

- 33ETT SpA, Genoa, Italy

- 34Centre for Ecological Research and Forestry Applications, Autonomous University of Barcelona, Barcelona, Spain

- 35University Corporation for Atmospheric Research, Boulder, CO, United States

- 36Hellenic Centre for Marine Research, Heraklion, Greece

- 37Laboratori R. Sartori, University of Bologna, Ravenna, Italy

- 38National Earth and Marine Observation Branch, Geoscience Australia, Canberra, ACT, Australia

- 39Geophysical Institute–Bjerknes Centre for Climate Research, University of Bergen, Bergen, Norway

- 40Alliance for Coastal Technologies, Chesapeake Biological Laboratory, University of Maryland, Solomons, MD, United States

- 41Institute of Oceanology, Polish Academy of Sciences, Sopot, Poland

- 42Sorbonne Universités (UPMC Université Pierre et Marie Curie, Paris 06)-CNRS-IRD-MNHN, UMR 7159, Laboratoire d’Océanographie et de Climatologie (LOCEAN), Institut Pierre-Simon Laplace (IPSL), Paris, France

- 43Scripps Institution of Oceanography, University of California, San Diego, La Jolla, CA, United States

- 44Center for Marine Environmental Sciences, University of Bremen, Bremen, Germany

- 45Ocean Tracking Network, Dalhousie University, Halifax, NS, Canada

The oceans play a key role in global issues such as climate change, food security, and human health. Given their vast dimensions and internal complexity, efficient monitoring and predicting of the planet’s ocean must be a collaborative effort of both regional and global scale. A first and foremost requirement for such collaborative ocean observing is the need to follow well-defined and reproducible methods across activities: from strategies for structuring observing systems, sensor deployment and usage, and the generation of data and information products, to ethical and governance aspects when executing ocean observing. To meet the urgent, planet-wide challenges we face, methods across all aspects of ocean observing should be broadly adopted by the ocean community and, where appropriate, should evolve into “Ocean Best Practices.” While many groups have created best practices, they are scattered across the Web or buried in local repositories and many have yet to be digitized. To reduce this fragmentation, we introduce a new open access, permanent, digital repository of best practices documentation (oceanbestpractices.org) that is part of the Ocean Best Practices System (OBPS). The new OBPS provides an opportunity space for the centralized and coordinated improvement of ocean observing methods. The OBPS repository employs user-friendly software to significantly improve discovery and access to methods. The software includes advanced semantic technologies for search capabilities to enhance repository operations. In addition to the repository, the OBPS also includes a peer reviewed journal research topic, a forum for community discussion and a training activity for use of best practices. Together, these components serve to realize a core objective of the OBPS, which is to enable the ocean community to create superior methods for every activity in ocean observing from research to operations to applications that are agreed upon and broadly adopted across communities. 
Using selected ocean observing examples, we show how the OBPS supports this objective. This paper lays out a future vision of ocean best practices and how OBPS will contribute to improving ocean observing in the decade to come.

Introduction

The Ocean Observing Challenge

The oceans play a key role in global issues such as climate change, food security, sustainable consumption and production, and human health. The oceans are enormous, transcending human-defined ocean boundaries, and they are continuous and uninterrupted in time and space. The well-being of humanity is tightly linked to the oceans and the seas, as repeatedly stated in multiple policy frameworks including the United Nations (UN) Sustainable Development Goals (SDG) (Wackernagel et al., 2017; UNSDG, 2018) and the European Union’s (EU’s) Marine Strategy Framework Directive1.

The vast dimensions and internal complexity of the oceans and the seas make observing and monitoring challenging, particularly in remote and hostile environments. Therefore, observing activities require careful structuring for improving their efficiency, coherence, and coverage. Ocean observing experts have long recognized these challenges (Olson, 1988; Kulkarni, 2015) as well as the need to address them transnationally. A first and foremost requirement for collaboration in ocean observing is the need to follow well-defined methods. In this paper, “Ocean Best Practices” include all aspects of ocean observing from research to operations to products that benefit from proper and agreed upon documented methods. Ocean best practices are an essential component of the growing operational oceanographic services that provide ocean forecasts at multiple time and space scales.

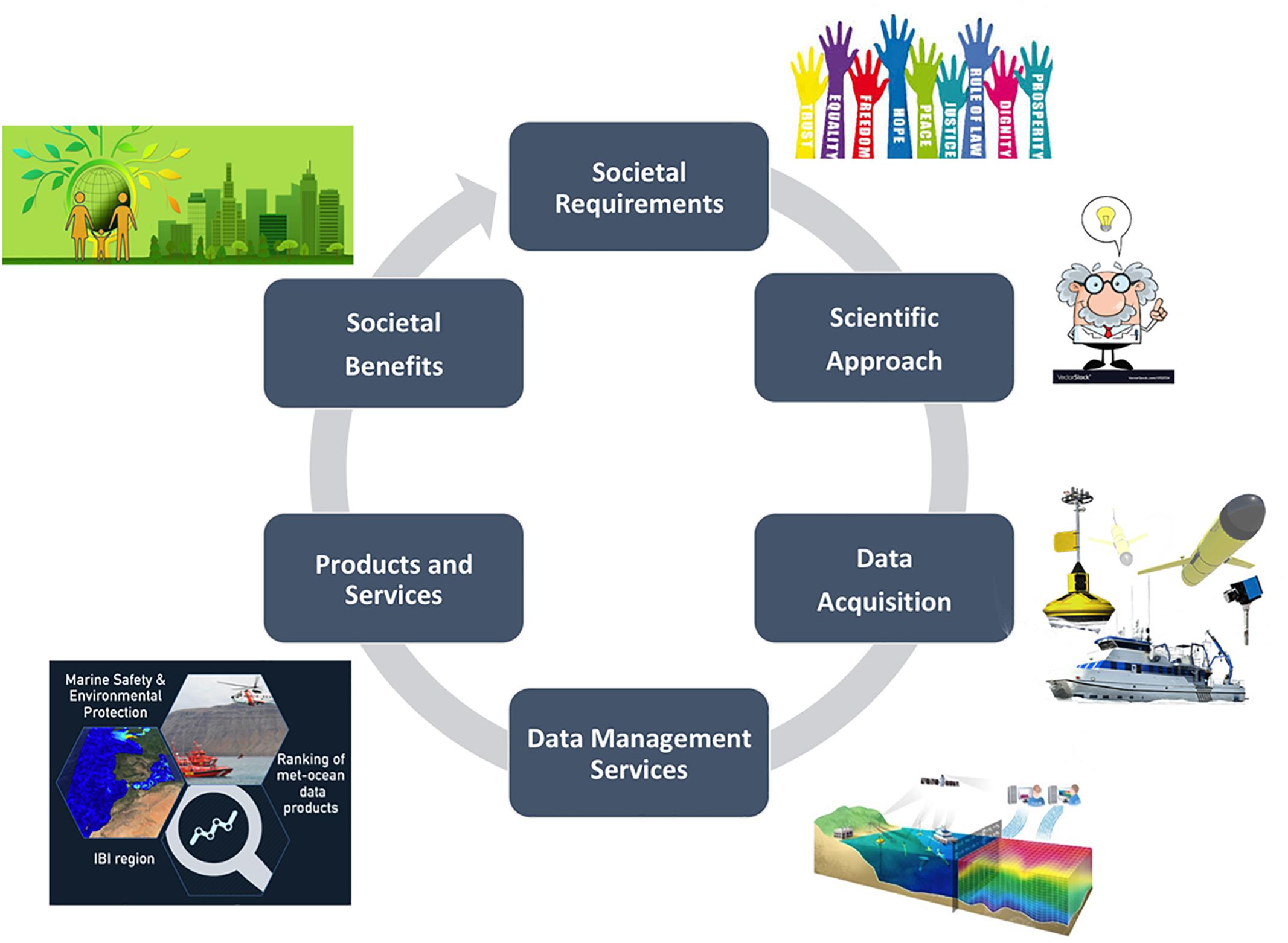

In a generic sense, “ocean observing” can be summarized by a chain of processes addressing “why to observe?” (requirement setting process), “what to observe?” (scoping of observational foci), “how to observe?” (coordination of observing elements), and “how to integrate, use and disseminate observational outcomes and understand their impacts?” (data management, analyses and creation and assessment of information products). This chain may be executed for a single scientific project that aims to formulate or refine a hypothesis, or by an environmental agency delivering operational products (e.g., warning the public about a hazardous event). These aspects make clear that “ocean observing” is more than just taking observations. Only by considering a need for observing and by making sure that information (including observations) can be merged into a product/outcome is the act of ocean observing complete and meaningful.

Motivated by the 2009 OceanObs’09 conference2 in Venice, Italy, the “Framework of Ocean Observing” (FOO) (Lindstrom et al., 2012) was documented as a structural approach for maintaining a sustained Global Ocean Observing System (GOOS). The FOO introduces two important concepts: (1) a system engineering approach to ocean observing; and (2) Essential Ocean Variables (EOVs). For the first, the result has been an increased awareness of the observing system value chain and the importance of the operating sequence of processes from societal and scientific requirements via observations to end-user products (see Figure 1). A value chain, which is a term adopted from economics, is broadly defined as a set of value-adding activities that one or more communities perform in creating and distributing goods and services (Longhorn and Blakemore, 2008). Evaluating the value chain for a system reveals not only its structure, but allows identifying and optimizing the processes operating within the system (Porter, 1985). For the second concept, the EOVs (GOOS, 2018)3, following the Essential Climate Variables model (Bojinski et al., 2014), were introduced in the FOO to prioritize parameters to observe, based on feasibility and societal and science impact. The feasibility is gauged, in part, by the observing system’s component maturity levels (called Technology Readiness Levels (TRL) and discussed in section “Approaches to Meet OBPS Requirements”). The “societal impacts” analyzed in the FOO ultimately take into account the legal and social dimensions of ocean observing (see, for example, Miloslavich et al., 2018a). This includes the challenges of cooperation, trust, ethics and others that also must be addressed if the ocean information chain is to be effective for society (Barber, 1987).

Introduction to Best Practices and Standards

Best practices and standards are the two most common dimensions present in broadly accepted methodologies and serve to ensure consistency in achieving a superior product or end state. As many readers may not be familiar with standards and their formation nor have direct experience with best practices, a short introduction is included here. Best practices and standards are part of a continuum of community agreements (Pulsifer et al., 2019). Best practices, in the way the term is used in this paper, are descriptions of methods, generally originated bottom-up by individual organizations. Best practices can become standards if established by panels in standards organizations or equivalent. This section will look at both standards and best practices, focusing first on best practices.

A best practice is a methodology that has repeatedly produced superior results relative to other methodologies with the same objective; to be fully elevated to a best practice, a promising method will have been adopted and employed by multiple organizations (Simpson et al., 2018). This definition is similar to definitions used in other fields for best practices (Bretschneider et al., 2005). Best practices can come in many forms such as “standard operating procedures,” manuals or guides. However, they all have a common goal of improving the quality and consistency of processes, measurements, data and applications through agreed practices.

The diversity within our ocean community and the continuous, asynchronous evolution of technology and capacity mean that several “best” approaches can be in active use without having been universally evaluated across the observing community (see section “The Issues for Ocean Best Practices”). Indeed, arriving at a more universal set of best practices may entail going through “commonly accepted and well documented” practices, which must then be compared and, if possible, synthesized.

Standards have the same objectives as best practices, but they may also serve as benchmarks for evaluation in addition to describing processes. They are generally developed top-down and may become mandatory, legislated standards, as in the European INSPIRE legislation4. The International Organization for Standardization (ISO) defines standards as “documents of requirements, specifications, guidelines or characteristics that can be used consistently to ensure that materials, products, processes and services are fit for their purpose.” Forming a standard within a Standards Development Organization (SDO) takes 3–5 years or more and relies on formal working groups to write the standard.

The top-down approach as used in standards development can be useful to achieve a long-term, stable consensus in interfaces and certain underlying processes. There are numerous SDOs creating global, open technology standards. These include, for example, the ISO, the IEEE Standards Association (IEEE-SA) and the Open Geospatial Consortium (OGC). OpenStandards.net provides an overview of SDOs5. SDOs adhere to equitable methodologies for standards development, but each can have different operating practices. For example, ISO is a global network of the foremost standards organizations of participating countries; there is only one member per country.6 On the other hand, IEEE-SA and the OGC are standards organizations that include a broad representation of industry, other organizations and individuals. The latter also have more flexibility to streamline the standards development process.

Both IEEE-SA and ISO recognize the benefits of having “recommended practices” (IEEE-SA) or “technical specifications” (ISO) – documents that address work still under technical development. Within ocean observing, there is a need for both standards and best practices. Generally, the bottom-up approach of ocean observing, together with the lack of a central mandating authority comparable to the World Meteorological Organization (WMO) in meteorology, favors the creation and use of best practices. However, the marine community also makes extensive use of standards. A good example is the IEEE 802.11 family of standards for wireless local area networking. For ocean observing, OGC has the Sensor Observation Service (SOS) standard to improve interoperability of sensor data management, which allows querying observations, sensor metadata, as well as representations of observed features.7
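To make the SOS querying idea more concrete, the following minimal sketch assembles a key-value-pair GetObservation request of the kind defined in SOS 2.0. The endpoint URL, the offering name and the observed-property URI are hypothetical placeholders rather than real services, and no network call is made.

```python
from urllib.parse import urlencode

# Hypothetical endpoint, used only for illustration; not a real SOS server.
SOS_ENDPOINT = "https://sos.example.org/service"


def build_get_observation_url(offering, observed_property, endpoint=SOS_ENDPOINT):
    """Assemble an SOS 2.0 key-value-pair GetObservation request URL."""
    params = {
        "service": "SOS",
        "version": "2.0.0",
        "request": "GetObservation",
        "offering": offering,                   # which observation offering to query
        "observedProperty": observed_property,  # which variable to return
    }
    # urlencode percent-encodes reserved characters in the parameter values.
    return f"{endpoint}?{urlencode(params)}"


# Both identifiers below are made up for the example.
url = build_get_observation_url(
    "ctd_mooring_1",
    "http://vocab.example.org/sea_water_temperature",
)
print(url)
```

A real client would send this request to an operational SOS endpoint and parse the XML response; those steps are omitted here because they depend on the specific server.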

The Ocean Best Practices System (OBPS) and Its Implementation

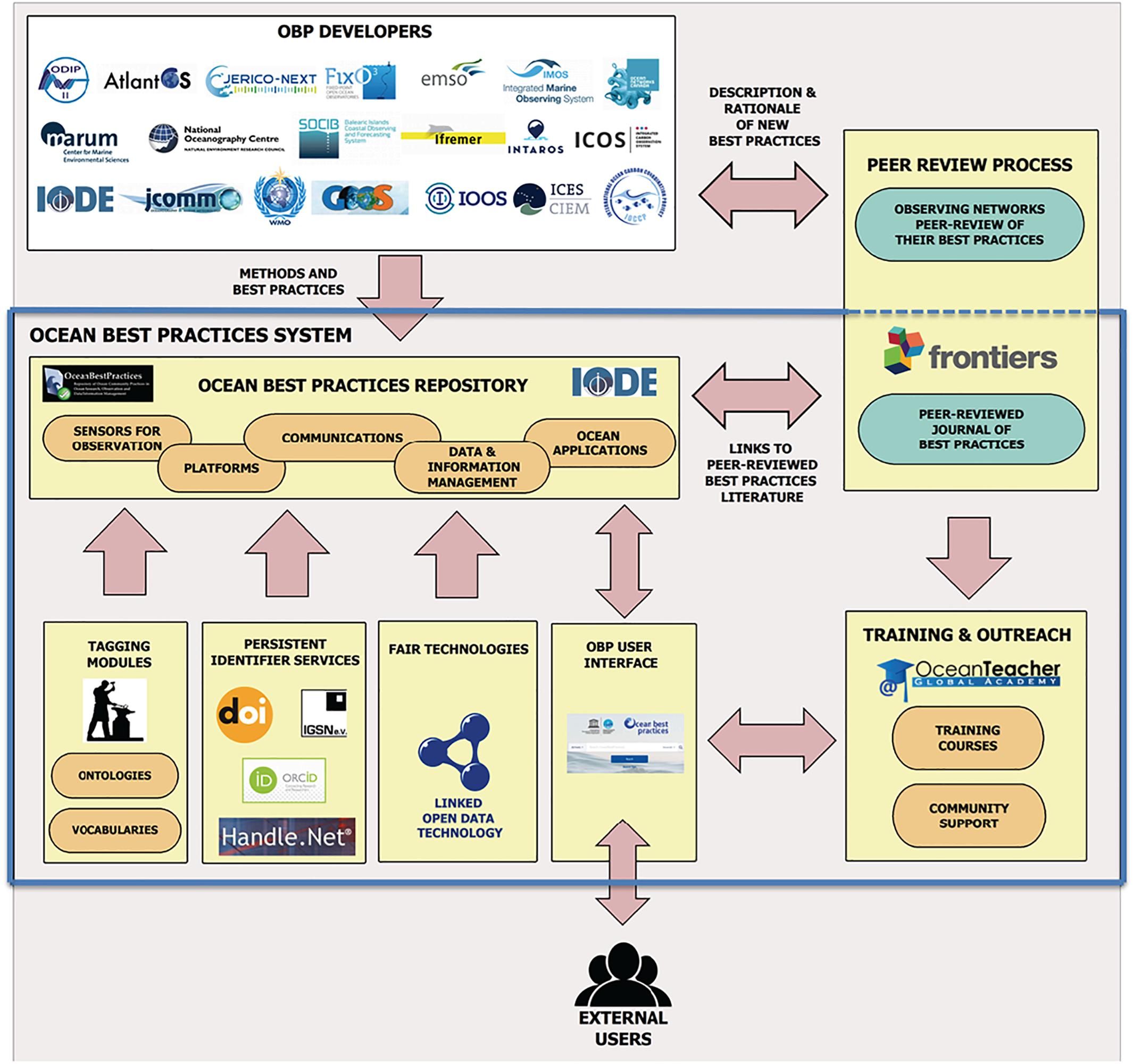

The OBPS is a new system comprising technological solutions and community approaches to enhance management of methods as well as support the development of ocean best practices. The OBPS includes a persistent document repository with enhanced discovery and access capabilities, a peer-reviewed journal research topic, and training approaches leveraging social media and the use of OceanTeacher Global Academy (OTGA).

The Issues for Ocean Best Practices

Ocean best practices face similar challenges to those in any other discipline – limited awareness of existing practices, lack of widespread distribution of practices, missing incentives that drive community building and lack of a centralized resource for submitting and accessing best practices.

Further, requirements on ocean observing may vary depending on mission and the ocean environment; for example, ocean best practices for the Arctic may not be applicable to the Tropics, while those that excel in coastal areas may have little value in the deep ocean. The targeted measurement precision, and thus the recommended best practice, differs depending on whether one seeks to observe small climate signals in the deep ocean or to monitor near-real-time observations in coastal areas. The variability in requirements can be so profound that it inhibits collaboration between communities. Likewise, variability of human and technology capabilities across institutions or countries may prevent global application of best practices. Documentation of best practices may also differ across sectors such as research institutions and private sector organizations. Sensor and platform manufacturers have competitive pressures to provide documentation on their products, but not necessarily with the level of detail needed for creating best practices. There is also resistance to adopting the latest best practices when doing so may increase sensor or platform non-recurring engineering costs.

Another issue for broad adoption of best practices is that they have often been passed along through direct training and oral tradition rather than through written and openly distributed documentation. Additionally, in the academic community, publication of methods is not as highly regarded as publication of original research. Even when methods are published in a scientific journal, wide distribution of best practices is not assured because many journals are behind subscription barriers despite the growing mandate for open access publishing models.

Even when methods are documented, the review process across communities is not consistent. One way to overcome this shortcoming is through community-wide acceptance of the need to peer review ocean best practices in the same way that science results are peer reviewed. Large networks, such as the Integrated Ocean Observing System (IOOS) in the United States (U.S. Integrated Ocean Observing System, 2014) and the Australian Integrated Marine Observing System (IMOS), have historically been able to motivate and support peer review internally when it is an organizational priority. Smaller institutions may not have the capability because they do not have access to a wide community of readily motivated independent experts.

An alternative to bottom-up peer review is to have an organization with a top-down coordination mandate. This has been successfully applied in the field of atmospheric observations for Numerical Weather Prediction (NWP), under the auspices of the WMO. Weather observation and forecasting have a widely understood benefit for society, which is accepted and supported by governments. Atmospheric observations for NWP are coordinated through governmental agencies and based on globally agreed upon methods encoded in WMO standards. For oceans, such top-down approaches occur where a direct societal application is linked to observing, as, for example, in ecosystem management for fisheries through the intergovernmental marine science organization ICES.

In other cases, such as GOOS, which currently operates on rather loosely defined observing objectives and relies on voluntary activities, such a structured and globally agreed mandate process does not exist. Nevertheless, ocean observing networks operating under the GOOS umbrella have in some cases created community-agreed best practices documentation. GO-SHIP (Hood et al., 2010; Talley et al., 2016) has created well-defined standard operating procedures, which are propagated throughout the project. The same is true, to a lesser extent, of Argo (Roemmich et al., 2009) and OceanSITES (Send et al., 2010). Smaller networks without the breadth of resources and participation have a harder time creating broadly reviewed practices.

In summary, there are many challenges in creating and adopting practices at a regional and global scale. These include both technical and human factors that need to be addressed by the OBPS.

Introduction to OBPS and Description

The Ocean Best Practices System (OBPS) provides a foundation upon which the ocean community can more systematically develop and use best practices (Pearlman et al., 2017). The OBPS is centered on a core vision and mission (Simpson et al., 2018). It envisions having “agreed and broadly adopted methods for every activity in ocean observing from research to operations to applications.” The mission statement that corresponds to the vision is “to provide coordinated, sustained global access to methodologies/best practices across ocean sciences to foster collaboration and innovation.” The OBPS that was created to support this vision and mission is shown in Figure 2. At the heart of the system, the OBPS repository derives from expanding the scope of the OceanDataPractices Repository initiated in 2014 (Simpson, 2015). The repository software provides a secure home for the collection of documents stored in the system. Repository content can be annotated through defined metadata fields, enhancing structured archiving and retrieval. Further, submitters are encouraged to use document templates to improve consistency of the document formats and enable improved tools to be developed8. The repository’s content is indexed by all the major search engines and harvested by services such as Google Scholar, Scopus, OpenAIRE, and ASFA. To support such indexing, the repository assigns DOIs to submitted best practices9. If documents already have DOIs assigned to them by an external system, their existing identifiers are added to the system and are used as the preferred DOIs within the OBPS.

Figure 2. OBPS structure (large box across middle of figure), core technologies (along the bottom of the box), and best practice provider organizations (in the box at top). Training is shown at the bottom right.

Peer review of documents stored in the OBPS repository is supported through a partnership with the journal Frontiers in Marine Science. There are many forms of peer review (Walker and Rocha da Silva, 2015); Frontiers in Marine Science provides an open access platform and identifies reviewers for accepted articles. This was judged to be an important part of the community forum process desired for OBPS in that it would help build consensus around what is indeed “best” in different settings. However, peer review will not always resolve issues among competing practices, or determine at what point a new practice should replace an existing one.

In addition to the peer review offered by academic journals, the review of submissions by expert panels from programs like GOOS10, the Joint Technical Commission for Oceanography and Marine Meteorology (JCOMM)11, the International Oceanographic Data and Information Exchange (IODE)12, and others, will help identify recommended practices for respective stakeholders. GOOS has identified EOVs as a good starting point to develop and demonstrate an expert panel process, as the EOVs support current and pressing needs for global observation. Based on the experience in implementing this process for the GOOS EOVs, the precedents can be refined for use by other groups.

An archive of best practices would be of little use if it did not support training of ocean observers and users. For the training component, OBPS leverages the OTGA. This addresses all levels of professional development with the objective to support young professionals and also those who are entering or working across disciplines. With the continuing advancement of personal communication technologies and social media, there are many more opportunities to augment traditional classroom training.

To understand the details of the OBPS implementation, the system requirements guiding the design will be reviewed first.

Requirements for the Ocean Best Practices System

The long-term requirements for the OBPS came from the ocean observation community through a series of workshops and town hall meetings. Foremost among these requirements is to serve as a global focal point for ocean best practices. The more detailed requirements include:

(1) System infrastructure requirements:

• Operations should be sustainably supported.

• Open architecture should provide adaptability to future developments; mechanisms should be available to update components and procedures on a timely basis as experience grows and new technologies/methods come on-line.

• Data, information, and software hosted by the system should be open source and guided by the FAIR principles for making data and best practices more findable, accessible, interoperable and reusable (Wilkinson et al., 2016). Openness and transparency should be embedded in the design and operation of the system.

• Intellectual property of providers should be respected; depositing content into an open access OBPS repository on a non-exclusive basis allows the provider to retain ownership (copyright) which preferably should be indicated by a Creative Commons License13, with the use permissions attached to the document.

• Peer review should be facilitated and encouraged.

• Training and user needs should be supported for developed and developing countries.

(2) Requirements set by system users (best practice providers and best practice end users):

• The repository should be populated with a sufficient number of best practices entries for users to want to use the system.

• Users should be able to discover and more effectively compare practices.

• The search, augmented by semantic technology, must be intuitive and easily done by non-experts.

• Maturity levels of best practices should be identified based on consistent, reproducible processes.

• The level of effort needed to submit documents to the system should be minimized and, where possible, automated metadata and content ingestion using a template should be available.

• Unique identifiers should be assigned to all practices including updated versions.

• Expanded metadata should include indicators for EOVs and SDGs, as well as the maturity of the practice.

• Access statistics/metrics for a practice should be available to providers and users.

By implementing these, the primary functionalities of the OBPS provide for: (1) contributions (both by a provider and from web inputs); (2) use (including discovery and access and comparisons of documented practices); (3) review (completeness, peer review, and updating); (4) training; (5) unique identification of documents and authorship; and (6) metrics for impact and visibility of uptake.

Approaches to Meet OBPS Requirements

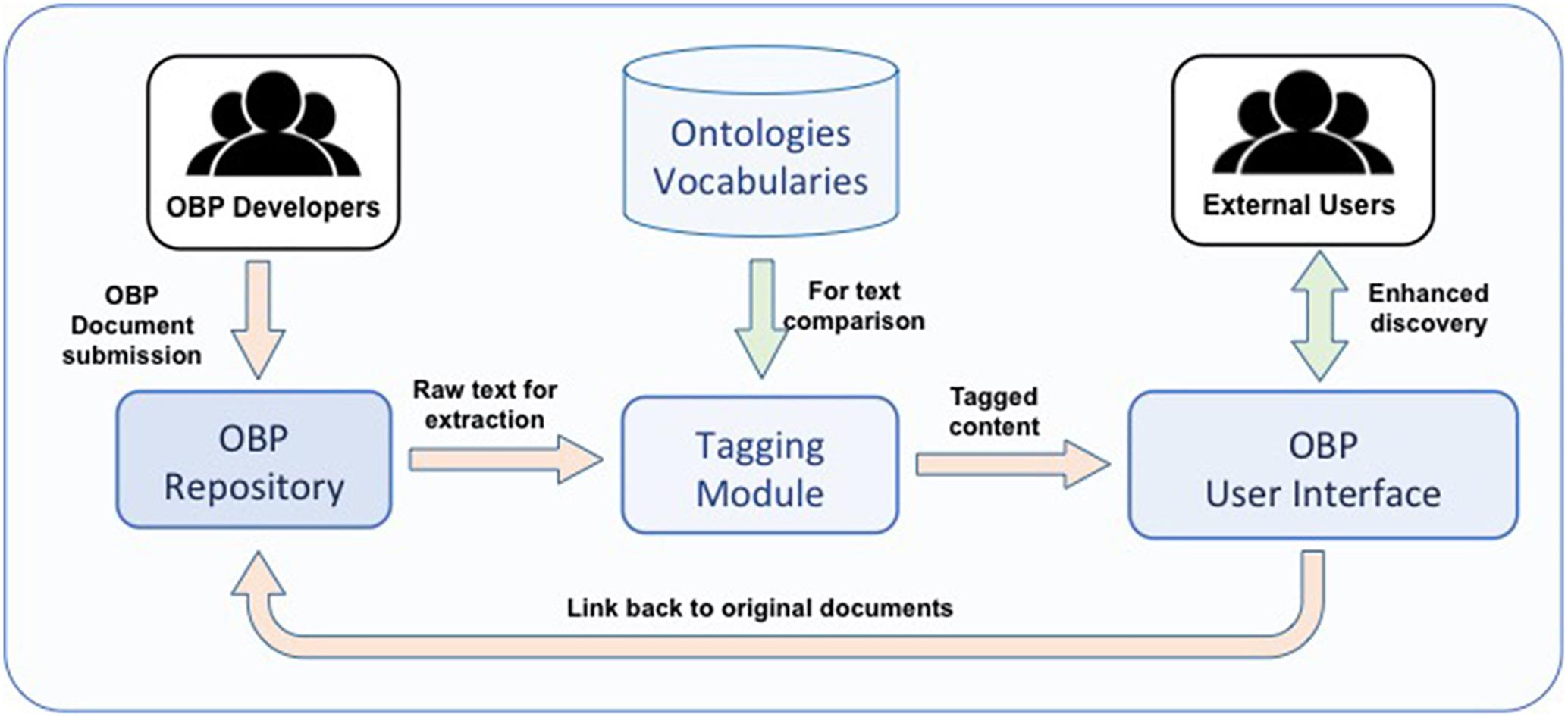

Advanced technology has been incorporated into the repository to improve coherent discovery of documents with diverse formats. Search and indexing capabilities were extended into the text within each document to tag user identified words via text mining and natural language processing techniques (Figure 3). The OBPS Search Interface14 presents a user-friendly portal to make use of both the indexing and the terminological tags generated by the system, offering enhanced access through FAIR-aligned interoperability solutions.

Figure 3. Tagging documents using marine related ontologies enhances best practice discovery. Documents are submitted to the repository and text in the document is sent to the tagging module, which identifies tagged words by comparing with marine and other ontologies and then transmits the results for access by users.
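The tagging flow described for Figure 3 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the OBPS implementation: the vocabulary, its `EX:` identifiers and the function name `tag_text` are hypothetical, and simple whole-phrase matching stands in for the text mining and natural language processing techniques mentioned above.

```python
import re

# Hypothetical mini-vocabulary mapping labels to made-up identifiers.
# A real deployment would load terms from published vocabularies or ontologies.
VOCABULARY = {
    "sea surface temperature": "EX:0001",
    "zooplankton biomass": "EX:0002",
    "salinity": "EX:0003",
}


def tag_text(text, vocabulary=VOCABULARY):
    """Return sorted (label, identifier) pairs for vocabulary labels found in text."""
    lowered = text.lower()
    hits = []
    for label, identifier in vocabulary.items():
        # Case-insensitive, whole-phrase match bounded by word boundaries.
        if re.search(r"\b" + re.escape(label) + r"\b", lowered):
            hits.append((label, identifier))
    return sorted(hits)


sample = "This manual describes calibration for Salinity and sea surface temperature sensors."
print(tag_text(sample))
```

Storing the resulting identifiers alongside each document is what would let a search on one term retrieve documents that use it, regardless of the document's format.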

From an IT perspective, the tagging capability supported by FAIR-aligned terminology resources is an innovation that has a two-way effect. On the one hand, the reference terminologies (Buttigieg P.L. et al., 2016) help connect the content of best practice/method description documents with widely-used, machine-readable descriptors commonly applied to data. On the other hand, the text-mined content of the OBPS collection can serve to identify and fill gaps in the terminologies. Through the OBPS, there is a two-way benefit in both (1) vocabularies such as the widely adopted British Oceanographic Data Centre vocabularies15 and (2) ontology resources covering a broad range of disciplines such as environments (Buttigieg P. et al., 2016), populations and communities (Walls et al., 2014), devices and protocols (Brinkman et al., 2010), chemicals (Degtyarenko et al., 2008), qualities (Mabee et al., 2007), and the SDGs (Buttigieg P.L. et al., 2016), all developed using the best practices of the Open Biological and Biomedical Ontologies (OBO) Foundry and Library (Smith et al., 2007). The feedback process has already seeded new research initiatives in ocean-oriented artificial intelligence, aiming to build stronger links between the vocabularies, thesauri, and ontologies deployed in the OBPS. These developments are a means to prepare the way for future integration and extended discoverability as new ocean observing capabilities emerge.

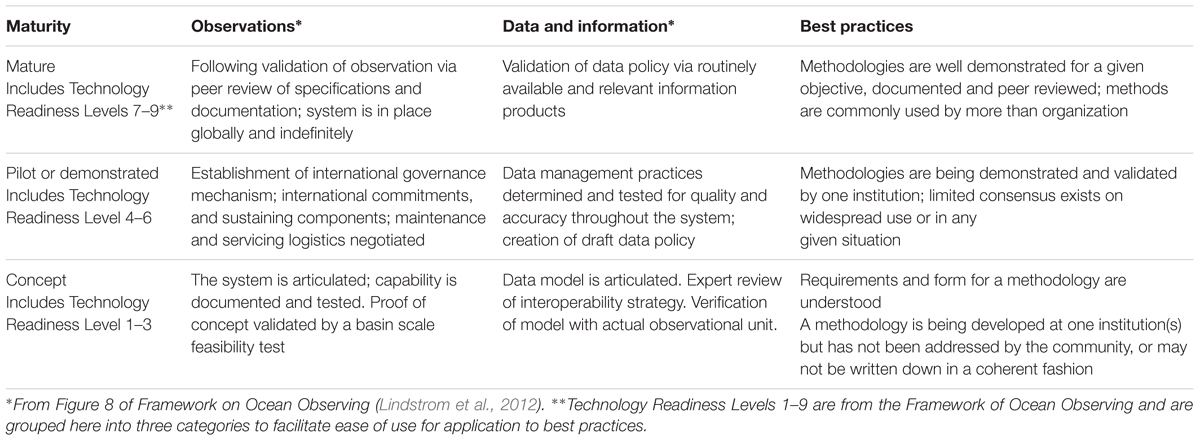

In addition to the new search capabilities, other features support scientists working outside their specialty or students looking for best practices and standard methods. Users new to ocean observing will want to know, for example, the maturity of a best practice. One maturity assessment tool is the Technology Readiness Level (TRL) scheme, developed by NASA in the 1970s (Heder, 2017). The number of readiness levels that is useful varies with the application (Olechowski et al., 2015). NASA uses nine levels. The FOO uses both a nine-level scale and a reduced scale with three levels: concept demonstration (immature), demonstration (pilot) and operational capability (mature) (also used in GOOS, 2018)16. Similarly, current best practices range from “mature” monitoring of certain physical parameters (e.g., temperature) to less mature methodologies (e.g., for zooplankton biomass and diversity). Depending on the system or process element, the definitions of mature and immature are not always easy to articulate consistently (Ferguson et al., 2018). OBPS will use the following definitions that have been adapted from the FOO to address best practices:

• Mature: Methodologies are well demonstrated for a given objective, documented and peer reviewed; methods are commonly used by more than one organization.

• Pilot or Demonstrated: Methodologies are being demonstrated and validated; limited consensus exists on widespread use or in any given situation.

• Concept: A methodology is being developed at one or more institutions but has not been agreed to by the community; requirements and form for a methodology are understood.

These definitions have been included in Table 1. Assignment of maturity levels is incorporated into the best practice templates and is part of the metadata submission to the repository completed by the author(s). For authors to be consistent in their assignment of maturity levels, there may need to be more descriptive details of each maturity level. These could, for example, include assessing the maturity level from auxiliary information such as details about the review process (open review, number of comments received, number of experts that reviewed the document, …) and other factors. This is still under consideration.
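As a hedged illustration of how a nine-level readiness score could be collapsed onto the three maturity levels defined above, consider the sketch below. The cut points (1–3, 4–6, 7–9) are an assumption made for this example and are not specified in this paper; the names `Maturity` and `maturity_from_readiness` are likewise hypothetical.

```python
from enum import Enum


class Maturity(Enum):
    """The three maturity levels defined in the text."""
    CONCEPT = "Concept"
    PILOT = "Pilot or Demonstrated"
    MATURE = "Mature"


def maturity_from_readiness(level: int) -> Maturity:
    """Collapse a nine-level readiness score onto three maturity levels.

    Assumption for illustration only: 1-3 -> Concept, 4-6 -> Pilot, 7-9 -> Mature.
    """
    if not 1 <= level <= 9:
        raise ValueError("readiness level must be between 1 and 9")
    if level <= 3:
        return Maturity.CONCEPT
    if level <= 6:
        return Maturity.PILOT
    return Maturity.MATURE


for level in (2, 5, 8):
    print(level, maturity_from_readiness(level).value)
```

A fixed mapping of this kind would only cover part of the assessment; the auxiliary information mentioned above, such as details of the review process, would still need to be weighed by the authors.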

Another important innovation of the repository is the introduction of templates to create greater uniformity in the formats and descriptions of best practices; this will be instrumental in enabling further technological enhancement of the whole system, as described above. The templates offer a suggested structure for best practice documents as well as a comprehensive metadata sheet. At present three templates are offered (sensors, ocean applications, and data management) and more are under development. Depending on their subject and/or domain of relevance, the templates include different elements. The OBPS sensor template, for example, includes sections for calibration and deployment. Through such templates, best practice providers will be able to take advantage of automatic ingest of the metadata and document content into the repository with just one upload click. Concurrently, digital technologists will have a more workable and predictable foundation to further enhance the system with new features and components.
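A structured metadata sheet is what makes automatic ingest feasible, because each field can be checked before a document enters the repository. The sketch below shows one way such a check might look. The field names, the `MetadataSheet` class and the `validate` function are hypothetical and do not reproduce the actual OBPS template; only the three template types, the three maturity levels and the EOV and SDG indicators are taken from the text.

```python
from dataclasses import dataclass, field
from typing import List

# Values taken from the text: the three templates on offer and the maturity levels.
TEMPLATE_TYPES = {"sensors", "ocean applications", "data management"}
MATURITY_LEVELS = {"Mature", "Pilot or Demonstrated", "Concept"}


@dataclass
class MetadataSheet:
    """Hypothetical subset of a best practice metadata sheet."""
    title: str
    template_type: str
    maturity: str
    eovs: List[str] = field(default_factory=list)  # Essential Ocean Variables addressed
    sdgs: List[int] = field(default_factory=list)  # UN SDG numbers (1 to 17)


def validate(sheet: MetadataSheet) -> List[str]:
    """Return a list of human-readable problems; an empty list means the sheet passes."""
    problems = []
    if not sheet.title.strip():
        problems.append("title is empty")
    if sheet.template_type not in TEMPLATE_TYPES:
        problems.append(f"unknown template type: {sheet.template_type}")
    if sheet.maturity not in MATURITY_LEVELS:
        problems.append(f"unknown maturity level: {sheet.maturity}")
    if any(not 1 <= goal <= 17 for goal in sheet.sdgs):
        problems.append("SDG numbers must be between 1 and 17")
    return problems


sheet = MetadataSheet(
    title="Example CTD calibration procedure",  # made-up title for the example
    template_type="sensors",
    maturity="Mature",
    eovs=["Sea surface temperature"],
    sdgs=[14],
)
print(validate(sheet))
```

Returning a list of problems rather than raising on the first error lets a submitter see everything that needs fixing in one pass, which fits the stated goal of minimizing submission effort.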

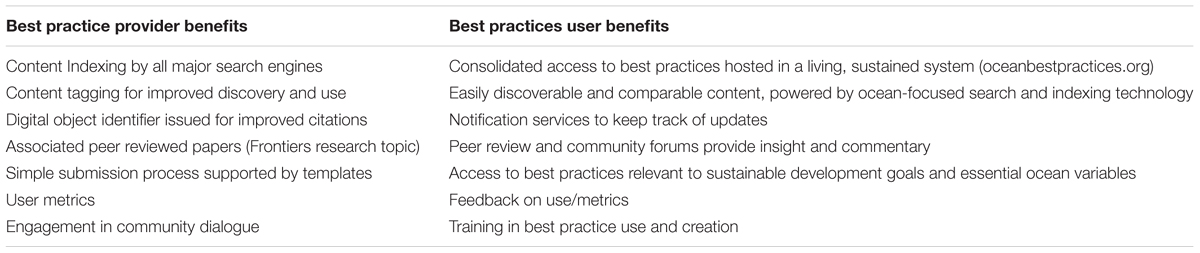

The many benefits that the repository offers to both best practice providers and users are listed in Table 2.

Peer Review Process

Peer review is an important part of broadening acceptance of methods. The OBPS will accept submissions from a wide base of practitioners and, given personnel capacity, undertakes light quality checks to ensure content is relevant and intelligible; the author is still responsible for the accuracy of content. Across ocean sciences, peer review of methods is done in different ways by different programs and networks. For those not engaged in the larger ocean observing networks, generally under the JCOMM umbrella, ways to approach best practice review are unclear. Thus, it was recommended at the Ocean Best Practices Workshop in November 2017 (Simpson et al., 2018) that the OBPS should provide the ability to publish ocean best practices (methods) and related documents in a recognized journal. This resulted in the establishment of a Research Topic (RT), also known as a “Special Issue,” entitled “Best Practices in Ocean Observing” in the journal Frontiers in Marine Science - Ocean Observations17. The Frontiers journal was selected because it offers open access to published articles (via an author’s publishing fee), a timely review and publication process, and the possibility for commenting on published research through a commentary tool available at the publisher’s website. Thus, it provides an opportunity for wider exposure of methods documentation. Moreover, best practice provider groups have the opportunity to publish their results in peer-reviewed literature, so that the work is recognized in standard research performance metrics and for professional advancement. The RT peer review process is rigorous and is an element of best practice community review.

Other methods of community peer review are also part of the recognition process. Large networks such as the Global Ocean Ship-based Hydrographic Investigations Program (GO-SHIP) provide internal peer review by their experts. Projects such as FixO3 and JERICO in Europe have done peer review using project participants and IOOS in the United States uses program participants and panels. For EOVs, GOOS Panels18 are also assessing review procedures to identify “recognized” methods that support the monitoring of EOVs. Best practices that have undergone these reviews or publication in the RT are identified as peer reviewed in the repository.

Training and Knowledge Transfer

Adoption of best practices is an increasing and pressing concern of global science. For example, the number of scientists involved in ocean acidification research has increased rapidly over the past few years due to the urgent need to better understand the effects of changes in carbonate chemistry on marine organisms and ecosystems. It then became necessary to establish common procedures recognized by experts for the measurement of carbonates, in particular in order to educate inexperienced young scientists entering this field of study (Riebesell et al., 2011). In other words, best practices form a means to transfer techniques and approaches from experts to new practitioners in developed and developing countries (Bax et al., 2018).

Within OBPS, capacity development and training are being implemented through the IOC OTGA19 operated by IODE in collaboration with other training efforts in the ocean observing community (Miloslavich et al., 2018b). The IODE was certified as a Learning Services Provider in April 2018 (ISO 29990). The training is initially planned as a series of in-person courses consistent with the OTGA Regional Training model (IODE/IOC, 2016), which will cover the use of best practices on sensors, applications, data management and other topics. Courses will be offered in response to stated community needs. The classes benefit the individual participants and become more valuable if the participants become trainers. To that end, train-the-trainer courses will be offered. The repository accepts videos for training with the longer-term goal of creating a library of remote best practice training resources that would be used in a blended training environment (personal and virtual). Video training on the use of the repository is in the planning stage.

In addition to the courses directly linked to the OBPS, summer schools based on ocean observing methods and other opportunities will promote the system and encourage students, technicians and scientists to use best practices and provide feedback to the best practice providers.

Implementation Examples

The discussion of methodologies can become very abstract to non-specialized practitioners. Concrete illustrations of the creation, adoption and routine employment of best practices are necessary. For this paper, three practices along the value chain have been selected: (1) a HAB assessment product (see section “Harmful Algal Bloom (HAB) Forecast Services”); (2) guides for Eulerian platforms (see section “Global Eulerian Observatories Networks”); and (3), a sensor best practice to measure oxygen in the ocean (see section “Oxygen Data Accuracy for Sensors on Argo Floats”). In addition, the observations monitoring (see section “Assessing the Performance of Ocean Monitoring Systems”) as well as the quality assurance and quality control (QA/QC) of data (see section “Quality Assurance/Quality Control”) are crosscutting issues that are also included in this section.

Harmful Algal Bloom (HAB) Forecast Services

Harmful Algal Blooms occur when colonies of algae grow out of control and produce toxic or harmful effects on people, fish, shellfish, marine mammals and birds. The Irish Marine Institute HAB forecasting bulletin is an example of a successful application and documented best practice developed to actively support end-user aquaculture farm management decisions. Other HABs reports are issued routinely for the Gulf of Mexico20, the Great Lakes and California in the United States and through CMEMS for Europe21.

The HAB forecast is created using in situ ocean observations, numerical modeling and remotely sensed satellite observations to create useful science-based products that are visualized as maps and plots. This is a good example of an application reaching across the entire value chain from observations to providing a product to an end user. For the end-product, the forecast and associated risk are determined by a local expert to provide a coherent national/regional early warning system based on best practices across a range of methodologies.

Searching for “Harmful Algal Bloom” currently22 returns 58 documents in the oceanbestpractices.org repository. Twenty-four of those documents were published between 2015 and 2018 and are distributed between local/regional practices23 and global IOC manuals and guides. Authors for the documents are generally experts in their fields either from academia or members of a local government. An exemplar of what is done by the Marine Institute of Ireland for their HAB forecasting bulletin has been entered into the OBPS repository (Leadbetter et al., 2018).

There are a number of challenges in developing the HAB forecasting bulletin. The first is that, because of the complexity of HABs, there is no single “best” practice at the global level, i.e., regional circumstances matter (Anderson et al., 2018). The OBPS repository, by providing broad access to community practices used to predict HAB dynamics, offers the potential for productive improvements in the forecast capabilities regionally.

Global Eulerian Observatories Networks

The Eulerian (also known as moored or fixed point) observatory infrastructure is moored to the sea floor but may reach upward to/across the ocean surface. There is great variability in the design and implementation of these observatories in terms of both technologies and methods (Coppola et al., 2016). The infrastructure can include very simple to complex/power-hungry sensors supporting a wide range of marine disciplines. Real-time data access can be available from cabled sites or via surface telemetry buoys.

The management of individual Eulerian observatories is primarily in national (institutional) hands but multinational consortia exist that coordinate the infrastructure for regionally optimized observing approaches, such as the European EMSO-ERIC (formerly FixO3), Tropical Arrays (e.g., PIRATA) and Regional Alliances such as IMOS. Driven by the wide variability, almost all consortia have created their “own” best practices for sensors, infrastructure, and data that are tailored to their needs. Many of them are based on field experience and personal and institutional expertise.

Given the national or consortia driven operation of the infrastructure, Eulerian observatories documentation is highly fragmented. Under the umbrella of the global Eulerian observatory network OceanSITES, community agreement has been achieved for selected best practice segments such as data dissemination. The OceanSITES data policy and the OceanSITES NetCDF data file format and file dissemination are important achievements. However, even for the data dissemination, some of the biological and biogeochemical data that need complex, comprehensive documentation on methods and agreement from a global community have not converged (e.g., sediment trap data).

Searching the OBPS repository for Eulerian observatory-related keywords24 revealed the existing documentation (number of documents given in brackets): Eulerian (19), OceanSITES (27), FIXO3 (7), Mooring (151), PIRATA (11), RAMA (9), TPOS (3), TOGA-TAO (5), EMSO (5), EuroSites (2), and OOI (7). This is a major achievement of the repository as a similar search would not be possible with other search engines. Another benefit of the OBPS is that best practices can be paired with other documentation that deals with generic techniques (e.g., conversion of acoustic backscatter intensity into target strength) relevant to Eulerian observatories in order to produce a broad harmonization of applicable practices.

In order to best leverage the investments in Eulerian observatories, regional and global coordination is mandatory. The integration is closely linked to appropriate best practices and their documentation regarding sensor handling, analysis techniques, or data reduction. This includes for example, data from sediment traps (carbon export), air-sea fluxes measured by the surface buoys, or all types of quality control data. With increasing maturity of sensors (e.g., pH, pCO2, zooplankton imagery) and emerging techniques (e.g., ‘omics’), new best practices will need to be created. Documentation of these practices in the repository will support enhanced harmonization across the Eulerian observatories networks on a regional to global scale.

Oxygen Data Accuracy for Sensors on Argo Floats

Accuracy for oxygen sensors has been improved during the last decade through the efforts of the Argo-oxygen community (Thierry et al., 2018b). The main advances came from intensive characterization of oxygen sensors by different groups (Bittig et al., 2018) and the creation of documented best practices. The target that was set at OceanObs’09 for scientific exploitation (1 μmol kg⁻¹) in open ocean studies (Gruber et al., 2010) can now be achieved if full attention is given to the recommendations on sensor calibration (pre- and/or post-deployment) as well as sensor performance validation during deployment, e.g., by in-air referencing (Bittig and Körtzinger, 2015). Searching for “oxygen optode” and “Argo” in the OBPS repository currently25 results in 16 documents.

Thanks to the Argo community work, three important practices have been documented: the calibration protocol for optodes26 (Moore et al., 2009) performed by manufacturers has been enhanced toward a multi-point calibration procedure27; a data processing and qualification chain has been defined in a best practices manual (Thierry et al., 2018a); and a guide has been written for the mounting of sensors on Argo platforms to enable in-air sampling (Bittig et al., 2015)28. Where it can be used, the in-air sampling constrains the oxygen optode in situ drift over time (Bushinsky et al., 2016; Bittig et al., 2018). In this case, the relation between the scientific community and industry (e.g., Aanderaa, Sea-Bird, NKE) allowed improvement of the technology in order to satisfy the recommended best practices (Bittig et al., 2015). Optode in-air observations throughout the Argo float deployment are the most recent best practice recommendations proposed by the Argo community (Bittig et al., 2015) and they considerably improve accuracy (Bittig et al., 2018) and are a key to achieving the OceanObs’09 goal for scientific exploitation. The best practices have been contributed to the OBPS to give them better visibility to the broader community.

Assessing the Performance of Ocean Monitoring Systems

There is a need to optimize the investments in ocean observing and to understand how marine monitoring data and information can be effective and reliable for developing societally relevant products. The first community-based best practice for monitoring systems that incorporates end-user products has been developed by the European Marine Observation and Data Network29. The practice, called a “Sea-Basin Checkpoint”30, produces an assessment of monitoring systems at the scale of the European Seas. An essential component of the Sea-Basin Checkpoint framework is the definition of assessment criteria and their measurable indicators.

A unique Checkpoint assessment best practice was developed for three European sea basins (North Atlantic, Mediterranean Sea, and Black Sea). The results for these basins demonstrated that the Checkpoint assessment is feasible and has led to a promising methodology (best practice). During the development, several challenges were encountered in creating the best practice. One was the establishment of proper end-user product specifications and the evaluation of the monitoring adequacy on the basis of comprehensive indicators (Pinardi et al., 2017). Another challenge was to have a sufficient number of end-user products to estimate the data and information adequacy across application sectors.

In the short term, the Sea-Basin Checkpoint assessment framework has to gain acceptance by a larger community. The methods for the Mediterranean Checkpoint have been documented in the OBPS repository (Manzella et al., 2017) to encourage review and dialogue of these methods. In this manner, the OBPS becomes one of the elements for expanding monitoring from a scientifically based network to an operational system.

Quality Assurance/Quality Control

There are two elements to quality management: quality assurance and quality control. Quality assurance can be defined as “part of quality management focused on providing confidence that quality requirements will be fulfilled.” Quality control can be defined as “part of quality management focused on fulfilling quality requirements.” While quality assurance relates to how a process is performed or how a product is made, quality control is more the inspection aspect of quality management31.

The metrological traceability of ocean measurements is an important element of QA/QC. Too often, operators of platforms fully rely on calibrations carried out by manufacturers and are not able to carry out independent quality checks and changes as in situ conditions are not accounted for. This is due to a lack of agreed upon methods across different observing programs. Harmonizing QA/QC procedures for the collected data across different observational programs is essential.

The best practice creation process at a component or system level starts with documenting all integration, design details and routine maintenance procedures. It is also critical to document real-time data QA/QC. In formulating quality control best practices, the breadth of the challenge requires many detailed manuals, each focused on a different facet of quality control32. Over the last decade, many such manuals were created and peer reviewed. These are all available in the OBPS repository.

The implementation of this quality assurance strategy on a global level will need the support and concurrence of international organizations such as GOOS, IODE, and JCOMM. As quality control harmonization progresses, the FAIR compliance of the OBPS repository will simplify and motivate coherent discussions and help consolidate quality control approaches across the observing community.

Beyond Implementation Examples

This section has shown that OBPS addresses many aspects of the data life cycle from the point of observation all the way through data products and services, providing needed visibility for these best practices. The OBPS is a living system and contains many practices that can facilitate interoperability and coordination across networks. A challenge for the OBPS project is to convince the ocean community of the benefits of harmonizing the processes and outcomes of ocean observing.

Future Vision for Ocean Best Practices

To enable broad adoption of best practices, advances in technology play an important role as well as other considerations such as community engagement (involving providers and users of observation data), governance and training/outreach. This section will look at potential advances during the next decade and then address how the management of methodological expertise and the development of best practices should evolve to serve the ocean observing community.

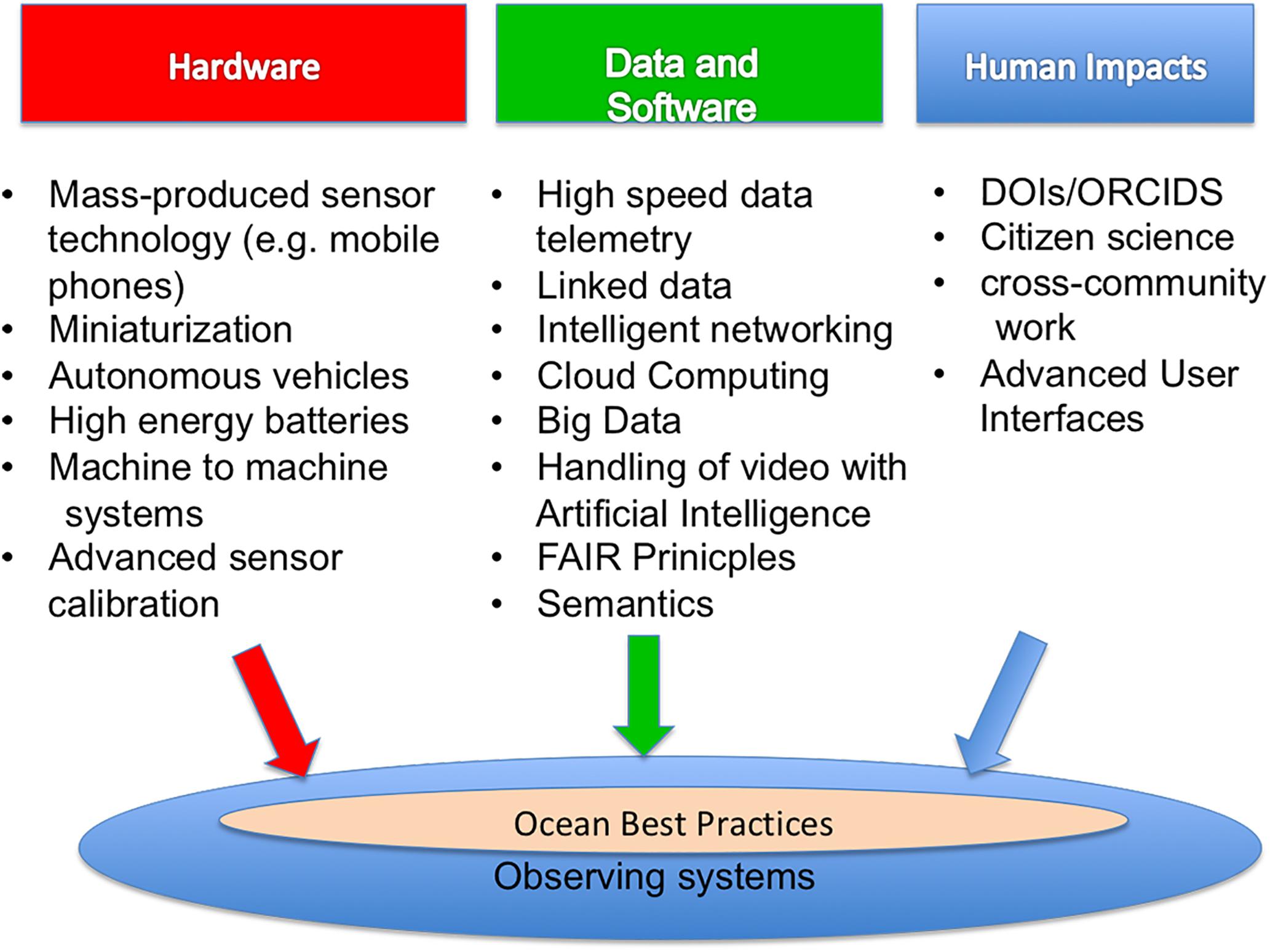

The Evolving Ocean Observing

Virtually all of the prominent advances expected in the coming decade contribute to the vision of “a truly global ocean observing that delivers the essential information needed for our sustainable development, safety, well being and prosperity”33. Selected present and future developments across the value chain that will drive future best practices are shown in Figure 4. They can be grouped into three categories (hardware, data and software, and human factors) and frequently feature exchanges with other disciplines. For hardware and software implementation in ever more complex scenarios, standards and best practices for engineering are vital assets for upcoming generations. To illustrate, consider that oceanographers have faced three persistent technology-related challenges over the past half-century: limited electrical power, challenging data telemetry and sensor calibration (predeployment and during in situ operations). Practices relating to power usage and communications optimization are often developed by individual programs, and the wider community would benefit if such methodologies were made more broadly available. Improving the transfer of technology from other fields, such as batteries from electric vehicles and sensors from mobile phones, will expand options for observing systems. With such transfers, the adaptation of technology will need guidelines and methods that are adoptable by multiple organizations.

Figure 4. Advances in the next decade will enhance ocean observing and impact best practices evolution.

As seen in Figure 4, software advances such as cloud computing, the use of artificial intelligence and others will need to be supported by a new generation of documented methods. As an example, advances should occur in the seamless integration of value chain elements leading to integrated ocean observing products. Linkages across portions of the value chain are being implemented through maturing standards such as the OGC sensor web enablement (del Río et al., 2017; Buck et al., 2019). In addition, integration across disciplines and marine data types (e.g., bathymetry, benthic imagery, ‘omic analyses,’ expanded biological and ecosystem monitoring) will be increasingly important (Brooke et al., 2017; Picard et al., 2018; Przeslawski and Foster, 2018). As the Internet of Things emerges, linkages across systems that are remotely and autonomously configurable will increase sensor-to-sensor networking and the needs for more advanced quality control (QC) processes.

There will also be an evolution to field-capable real-time QC. This needs to include a continued and expanded international effort to develop stable, broadly used QC standards. Manufacturers will begin implementing real-time QC processes and data flagging embedded within their sensors and field components (Bushnell, 2017), so that data can be more easily integrated. OBPS will need to support these and other advances in the coming years.
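As an illustration of the kind of flagging that could be embedded in sensors or field components, the sketch below applies a simple gross range test to a series of readings. The flag values follow the numbering commonly used in QARTOD-style schemes (1 pass, 3 suspect, 4 fail, 9 missing); the function name and the temperature thresholds are assumptions chosen for the example and are not taken from any specific manual.

```python
from typing import List, Optional, Sequence

# Flag values in the style of QARTOD data flags (assumed for this sketch).
PASS, SUSPECT, FAIL, MISSING = 1, 3, 4, 9


def gross_range_flags(values: Sequence[Optional[float]],
                      fail_min: float, fail_max: float,
                      suspect_min: float, suspect_max: float) -> List[int]:
    """Return one QC flag per reading using a gross range test.

    Readings outside [fail_min, fail_max] (e.g., the sensor's physical
    range) are flagged FAIL; readings inside that range but outside
    [suspect_min, suspect_max] (e.g., a locally expected range) are
    flagged SUSPECT; None is treated as a missing reading.
    """
    flags = []
    for value in values:
        if value is None:
            flags.append(MISSING)
        elif value < fail_min or value > fail_max:
            flags.append(FAIL)
        elif value < suspect_min or value > suspect_max:
            flags.append(SUSPECT)
        else:
            flags.append(PASS)
    return flags


# Hypothetical sea temperature readings in degrees Celsius.
readings = [12.0, None, 50.0, 38.0]
flags = gross_range_flags(readings, fail_min=-5.0, fail_max=45.0,
                          suspect_min=0.0, suspect_max=35.0)
print(list(zip(readings, flags)))
```

Keeping the thresholds as parameters reflects the point made above: the test logic can be standardized broadly, while the limits remain specific to a sensor and location.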

OBPS Evolution

As discussed above, the growing fields of ocean research, operational oceanography and ocean services will all require an expanded set of best practices that should be guided by international consensus. Aligned with this, the OBPS has the opportunity to become a more active component in such consensus building by offering support throughout the life cycle of promising methods. This may involve the creation of “pilot” or “demonstration” projects to illustrate the best practice (and standards) lifecycle.

The Ocean Best Practices System is investigating the inclusion of full text standards in the repository. Collaboration with recognized standards organizations, through the creation of working groups, will be a necessary part of this effort and will also lead to improved coherence.

Another major point of evolution is centered on the integration of best practices in the OBPS across the ocean value chain. To enable this, the inputs and outputs for each method in the system would need to include standardized (and machine readable) links between them. This would enable users to discover networks of methods across disciplinary and operational boundaries, supporting truly integrative product creation. Such linkages can be complex as seen in the HABs illustration case in Section “Harmful Algal Bloom (HAB) Forecast Services.” The linkages are not one to one, but many to many as the woven threads of a fabric, paralleling development of the data fabric concept under the Research Data Alliance34. Based on the current ability of the OBPS to create interlinkages across documents, the application of structured document templates, more advanced natural language processing and ontologies tailored to the OBPS requirements will enable semi- or fully automated solutions for this task.
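The many-to-many linkage described above can be pictured as a directed graph in which one method feeds another whenever an output of the first matches an input of the second. The sketch below is a minimal illustration under that assumption; the method names, the input/output labels, and the dictionary layout are hypothetical and do not represent the OBPS metadata schema.

```python
from collections import defaultdict, deque
from typing import Dict, Set

# Hypothetical methods along a HAB-like value chain, each with named
# inputs and outputs (labels are invented for illustration).
methods = {
    "in_situ_sampling": {"inputs": set(), "outputs": {"cell_counts"}},
    "satellite_processing": {"inputs": set(), "outputs": {"chlorophyll_maps"}},
    "numerical_model": {"inputs": {"cell_counts", "chlorophyll_maps"},
                        "outputs": {"transport_forecast"}},
    "hab_bulletin": {"inputs": {"transport_forecast", "cell_counts"},
                     "outputs": {"risk_bulletin"}},
}


def build_links(catalog: Dict[str, dict]) -> Dict[str, Set[str]]:
    """Link method A to method B when an output of A is an input of B."""
    producers = defaultdict(set)
    for name, spec in catalog.items():
        for output in spec["outputs"]:
            producers[output].add(name)
    links = defaultdict(set)
    for name, spec in catalog.items():
        for needed in spec["inputs"]:
            for producer in producers.get(needed, ()):
                links[producer].add(name)
    return dict(links)


def downstream(links: Dict[str, Set[str]], start: str) -> Set[str]:
    """Return every method reachable from `start` (breadth-first search)."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in links.get(current, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


links = build_links(methods)
print(sorted(downstream(links, "in_situ_sampling")))
```

In this toy catalog the in situ sampling method feeds both the model and the bulletin, while the bulletin depends on two upstream methods, which is the many-to-many pattern the text describes.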

Repository

Benefiting from the developments in Section “OBPS Evolution,” the repository will expand its range of services and provide interfaces with resources in other infrastructures in the coming years. The descriptions of best practices through their associated metadata will be expanded and standardized to further improve search and access. Further, existing templates will be updated to render their contents more machine actionable. The repository will also develop approaches to link methods to any data, software or code they reference. For example, persistent Uniform Resource Locators (URLs)35 linking methods to reference data sets hosted in trusted archives will become a common feature among best practices. Further, dated links to version-controlled GitHub repositories36 and/or Jupyter notebooks37 will provide software and process connectivity as well as reproducibility of software executable processes.

Finally, development of a practice is a time-consuming process (Przeslawski and Foster, 2018). To approach this more effectively, alternative media forms such as using video recording and data capture will be considered by the OBPS to enhance the documentation process. The repository will thus feature video embedding resources for content hosted through partner projects or via widely available social media systems that assure the necessary content for practices persists.

Peer Review

A process to identify preferred practices for defined applications and/or scenarios is a necessary part of the OBPS evolution. Currently, GOOS together with OBPS are developing a process to identify methods suitable to measure and report on the EOVs. To complement this review model, OBPS is exploring crowd-sourcing approaches to assess community support and adoption for methods in the system. Social media functionality including up- or down-voting coupled with commenting has had remarkable success in web platforms such as StackOverflow38. Indeed, several academic journals have incorporated forms of community review and hybrid models (Walker and Rocha da Silva, 2015). OBPS is exploring such hybrid systems to maximize community involvement, including through the Frontiers in Marine Science Research Topic on Best Practices in Ocean Observing. This Frontiers Research Topic forum is free to all for discussions of methods and comments on papers published in the journal. In the coming years, the aim is to pilot these possibilities and implement a consolidated approach for an expanded peer review model to help detect, test, validate, and ultimately update best practices as they emerge from the OBPS repository.

Training Modalities and Implementations

Expanding the use of common practices by new or expanded ocean research and application interests is a priority of the OBPS. As mentioned earlier, the system aims to support students, professionals working in cross-disciplinary collaborations and educators. Looking forward, and to fulfill its global mission, the OBPS must prioritize adoption of new techniques in training and capacity building capable of reaching across regions, cultures, and resources. The current OTGA approach of drawing upon ocean observation experts working with educators will be expanded. Moving beyond the traditional in-person training programs, OBPS will incorporate other community efforts such as online, multimedia-based training solutions that have been produced by ocean projects for their staff and others39. The OBPS will use similar web-based approaches to multiply the reach of workshops and other events/programs such as summer schools well beyond those that have the resources to be present in person. A similar effort for summer schools will provide a long-term community resource. The next stage of training using OBPS content will leverage the now refined modality of the Massive Open Online Course (MOOC)40. The effectiveness of MOOCs has already been demonstrated by organizations such as IMOS, which has developed a MOOC focused on marine scientists for learning about ocean observing and the use of marine data41 (Lara-Lopez and Strutton, 2019). Configuring high-quality MOOCs for different user groups will be an immense asset over time, consistently returning rewards that will soon outweigh their initial costs. These types of courses should also be configured as education content for university training and summer schools.

Moving beyond traditional classroom and video methods, a range of new tools such as visual immersion techniques as well as the use of three-dimensional computer-aided-design (CAD) drawings is envisioned42. There are also training opportunities to use hands-on sensor “models” created by 3D Printing (Bogue, 2016). All of these may be envisioned as some of the next steps for far-reaching training solutions.

Engagement, Outreach, and User Support

The value of any system is contingent on its relationship to its user community and scaled by the degree of its adoption. Thus, OBPS development over the next decade will be accompanied by community outreach and engagement activities to make the OBPS a system that users integrate into their routine work. Visibility for ocean best practices from Google and other search engines is important. This involves general technical approaches such as search engine optimization43, as well as building relationships with organizations and projects such that the OBPS will become integrated in their sites, increasing its exposure and connectivity in a more customized fashion.

At a finer level, interfaces will be created to report on user engagement with individual documents. A dashboard will be integrated into the OBPS user experience to convey information on how many times a document has been accessed, viewed, downloaded, and by what kind of user44. To move forward, a series of metrics will be defined for a best practice that could include the number of citations, the number of community “upvotes” and user feedback. This would complement a more formal endorsement process that would be done by panels of experts, providing submitters with more comprehensive insight into how their methods are being received.

While building the first instance of the OBPS, our experience at international workshops and town halls has shown that there needs to be an active user outreach effort, as many users are typically focused on methods most prevalent in their institution or immediate community of collaborators. Indeed, some may not even be aware of the concept of regional or global best practices. In response, we will continue to represent the OBPS at larger oceanography and science conferences, to demonstrate how the system can expand methodological awareness and can benefit oceanographers and others in their work. In addition, there will be sessions at specialty workshops focusing on ensuring the OBPS suits specialist needs. Less formally, monthly newsletters and reports on progress will continue to be circulated both for outreach and community building.

Governance

The long-term functionality of the OBPS will be sustained as a project within IOC/UNESCO, complemented by partnerships with major ocean observing organizations. Naturally, this model for sustainability and advancement requires an effective governance strategy that preserves, yet evolves, the system and its policies. This is particularly important as the founders of the OBPS transfer and distribute decision making to a wider group of custodians. Further, the system’s governance should also allow it to easily adapt to major international initiatives such as the UN Sustainable Development Goals as well as the UN Decade of Ocean Science for Sustainable Development. This will involve international collaboration and innovations to meet the challenges of the next decade and beyond.

Within the ocean community, a number of governance models could exist as either top-down or bottom-up. Ocean research, as mentioned earlier, generally operates bottom-up. Service and product organizations generally work top-down. As the community moves towards more operational ocean products in addition to supporting research, the OBPS must have adaptable governance that supports both bottom-up and top-down approaches. For example, the governance needs to recognize engineering challenges such as the inclusion of best practices used for system design and optimization. The OBPS should also include societal and ethics factors impacting ocean observing. One example is to include practices that support how to do a cost/benefit analysis. Another is to include practices on advice and methodologies for obtaining research authorizations, navigation rights, legal status of seabed resources or ship passage, conservation and management of marine living resources, etc. The use of animals in research requiring ethics committee approval is a subject that could be shared as best practices. Although these multiple aspects may differ from one country and continent to another, a centralized location that provides access to best practices covering the complexity of these social aspects of ocean observation would benefit all communities.

Through the governance approach above, the OBPS will be able to support the large and growing diversity of scientific and societal needs faced by ocean observers and users.

Summary and Recommendations

In the sections above, key capabilities have been identified that would make a robust environment for the development, promotion, and adoption of best practices by multiple ocean communities. This section distills the above sections into a set of recommendations that we believe will allow the OBPS to evolve to meet future needs. The order of the recommendations follows the order in the above text and is not necessarily the order of priority. The implementation order will come from discussions at OceanObs’19, IOC recommendations, dialogues with experts across the value chain and inputs from the planning and evolution of the UN Decade of Ocean Science for Sustainable Development.

(1) Improving the transfer of technology from other fields will significantly improve the capacities of observing systems. Widely available and accessible guidelines and methods that are adoptable by multiple organizations are needed to support this transfer.

Recommendation: Prioritize support for transfer and mainstreaming of advanced technologies from diverse communities through developing and promoting best practices.

(2) The linking of best practices will support more efficient research and operations product development. The challenge will be to have experts and best practice creators help others understand the impacts of selecting one best practice over another.

Recommendation: Create, optimize, and implement methods to link best practices across the value chain working with community experts and provide metrics and reports of the impacts on observations, modeling and end-user applications.

(3) There will be an evolution to field-capable real-time QC. With the growing use of autonomous systems and Internet of Things networking, there will be a complex evolution of platforms and sensors with interoperable, field-capable, and real-time QC. Thus, an expanded international effort to develop QC standards and best practices of all types is needed.

Recommendation: The OBPS must develop dedicated capacities to support methods in QC, with the expectation of increasing machine-to-machine communication and (semi-) autonomous operations.

(4) A consolidated terminology resource for ocean activities across the value chain is not yet broadly available. Continued evolution of marine terminology resources, including those that are relevant to oceanography and its applications, is needed. These applications range from sustainable development goals monitoring to genomics to aquaculture. The vocabulary expansion can come from words used in best practices contributions or from activities in related communities.

Recommendation: A broader range of terminology resources with relevance to ocean activities needs to be implemented and focused on ocean research and applications.

(5) Increased automation will impact observing practices as well as OBPS operations. To keep pace, there is a need for automated or semi-automated solutions for contributing a special collection of best practices to the repository and for quality controlling, reviewing, and updating it. Networks of autonomous vehicles and interlinked sensors are thus likely to become a virtual stakeholder group in the future of OBPS.

Recommendation: Prepare for automated contributions to the OBPS by co-strategizing with developers of such systems and AI practitioners working with their outputs.

(6) Identifying best practices that are common across EOVs in collaboration with the GOOS Panels will help establish consolidated methods to observe EOVs that can further the creation of science-based products.

Recommendation: With the respective GOOS EOV Panels, identify methods that are suited to delivering one or more EOVs and elevate them to best practices. Identify practices that support multiple EOVs. Then create a compendium of such best practices that can be highlighted and prioritized for community input and refinement.

(7) Transferring knowledge stored in the OBPS through training will evolve through the use of social media and more advanced communication technologies and platforms.

Recommendation: Create a long-term strategy for training using OBPS content, leveraging the advances in social media and technology, while engaging education professionals to design effective and regionally/culturally tailored course content.

(8) Environmental sustainability as well as interactions with societal needs and legal conventions will have important impacts on the future of ocean observing. To address this, the OBPS must link to global and regional policy frameworks such as the Sustainable Development Goals, the EU’s Marine Strategy Framework Directive and ocean-focused legal frameworks such as the UN Convention on the Law of the Sea (UNCLOS).

Recommendation: Engage communities in ocean and marine policy and law, offering the use of the OBPS to facilitate development and/or share best practices in the domain. In parallel, enhance OBPS technologies such that all documents can be more easily linked to applicable laws or policy directives and indicators. Further, and in preparation for the Decade of Ocean Science for Sustainable Development, focus effort on integration with systems used to advance and report on the UN Sustainable Development Goals.

These are selected recommendations for developments during the next decade. They are not complete and will certainly evolve as experience is gained in working with the OBPS and the ocean observing community at large. We invite all readers to contribute to the process ahead, ensuring the OBPS can suit their community’s needs.

Glossary and Definitions

Controlled vocabularies: A controlled vocabulary is an organized arrangement of words and phrases used to index content and/or to retrieve content of documents through browsing or searching. It typically includes preferred terms in professional or common usage. The purpose of controlled vocabularies is to organize information and to provide terminology to catalog and retrieve information (extracted from http://www.getty.edu/research/publications/electronic_publications/intro_controlled_vocab/what.pdf).

Copyright: is a law that gives the owner of a work (like a book, movie, picture, song or website) the right to say how other people can use it (see also intellectual property).

Eulerian observations: are a way of looking at fluid motion that focuses on observations at a specific location in the space through which the fluid flows as time passes.

Faceted search: Faceted search is a technique that involves augmenting traditional search techniques with a faceted navigation system, allowing users to narrow down search results by applying multiple filters.

Intellectual property: refers to the ownership of an idea or design by the person who came up with it. It is a term used in property law. It gives a person certain exclusive rights to a distinct type of creative design, meaning that nobody else can copy or reuse that creation without the owner’s permission; sometimes abbreviated “IP” (see also copyright).

Linked data: is a method of publishing structured data to the web such that it can be discovered and accessed by distributed systems and connected to related data or information through semantic references.

Machine learning: is Artificial Intelligence that enables a system to learn from data rather than through programming; the scientific study of algorithms and statistical models that computer systems use to effectively perform a specific task without using explicit instructions, relying on models and inference instead.

Metadata: Data that describes other data. Meta is a prefix that in most information technology usages means “an underlying definition or description.” Metadata summarizes basic information about data, which can make finding and working with particular instances of data easier; metadata may also be applied to descriptions of methodologies.

Natural language search: is a computerized search performed by parsing a query phrased in everyday language, where the user would phrase questions as though they were talking to another human.

Observing network: is the group that coordinates a family of observing devices that operate using similar technology (e.g., Spray glider, Slocum glider, Seaglider,…).

Observing system: is a system of ocean observing elements – including devices (organized in observing networks), data integration, data product generation and dissemination, requirements setting, science approach for the requirement set, etc.

Ontology: A formalized, machine-readable, and logically consistent representation of human knowledge, typically organizing phenomena into a hierarchy of categories and their instances. Categories and instances are connected through logical relationships which can be understood by machines.

Open data: Data which is freely available to everyone to use and re-publish as they wish, without restrictions from copyright, patents or other mechanisms of control.

Open access: refers to research outputs which are distributed free of cost or other barriers, and possibly with the addition of a Creative Commons License to promote reuse.

Open source: is a term denoting that a product includes permission to use its source code, design documents, or content. It most commonly refers to the open-source model, in which open-source software or other products are released under an open-source license as part of the open-source-software movement. Use of the term originated with software, but has expanded beyond the software sector to cover other open content and forms of open collaboration.

Peer review: is a process by which a scholarly work (such as a paper or a research proposal) is checked by a group of experts in the same field to make sure it meets the necessary standards before it is published or accepted.

Performance metrics: Metrics are quantitative measures designed to help evaluate research and operations outputs. Metrics are intended to be used in conjunction with qualitative measures such as peer review.

Repository: is an archive of documentation or objects; in this paper refers to the OceanBestPractices repository hosted at IOC/IODE of UNESCO.

Semantics: means the meaning and interpretation of words, signs, and sentence structure; the branch of study within linguistics, philosophy, and computer science which investigates the nature of meaning and its role in practical applications.

Standard operating procedures (SOP): is a set of step-by-step instructions compiled by an organization to help workers carry out complex routine operations.

Standards: are documents of requirements, specifications, guidelines or characteristics that can be used consistently to ensure that materials, products, processes and services are fit for their purpose.

Tagging: in information systems, a tag is a keyword or term assigned to a datum or information content entity (such as a digital image, word or phrase in an electronic document or a computer file). This kind of metadata describes an item or subitem and allows it to be found again by browsing or searching.

Text mining: is the process of deriving information from text. The overarching goal is to turn text into data for analysis, via application of natural language processing and analytical methods.

Trusted: Regarded as reliable or truthful; able to be depended on for an application or analyses; can also be applied to relations between organizations or people.

Value chain: can be defined as the set of value-adding activities that one or more organizations perform in creating and distributing goods and services. In terms of ocean observing, the value chain approach can be applied to consider societal benefits of the data and assess the value of data and data features.

Vocabulary: A list or collection of words or of words and phrases usually alphabetically arranged and explained or defined.
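
As an illustration of the tagging and faceted search entries above, the following minimal Python sketch filters a small set of tagged documents by several facets at once and then counts the remaining values of another facet. The document titles, facet names (`platform`, `variable`, `type`), and the functions `faceted_search` and `facet_counts` are hypothetical and chosen only for this example; they do not describe how the OceanBestPractices repository is implemented.

```python
from collections import Counter

# Hypothetical records: titles and tag values are invented for illustration.
DOCUMENTS = [
    {"title": "Glider CTD calibration", "platform": "glider",
     "variable": "temperature", "type": "SOP"},
    {"title": "Mooring oxygen QC", "platform": "mooring",
     "variable": "oxygen", "type": "manual"},
    {"title": "Glider oxygen optode handling", "platform": "glider",
     "variable": "oxygen", "type": "SOP"},
]


def faceted_search(documents, **filters):
    """Return the documents whose tags match every requested facet value."""
    return [
        doc for doc in documents
        if all(doc.get(facet) == value for facet, value in filters.items())
    ]


def facet_counts(documents, facet):
    """Count how many documents carry each value of a single facet."""
    return Counter(doc[facet] for doc in documents if facet in doc)


# Narrow the results by applying two filters together.
hits = faceted_search(DOCUMENTS, platform="glider", type="SOP")
print([doc["title"] for doc in hits])

# Show which values of another facet remain available for further narrowing.
print(facet_counts(hits, "variable"))
```

In a production system, the tag values would normally be drawn from a controlled vocabulary so that equivalent terms are counted under the same facet value.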

Author Contributions

JP, MBu, LC, JK, PB, FP, PS, MBa, FM-K, CM-M, PP, CCh, JH, EH, and RJ conceived, developed, and wrote the bulk of the manuscript. EA, MBe, HB, JJB, JBl, BB, RB, JBo, EB, DC, VC, ML, AC, HC, CCu, ED, RG, GG, VH, SH, RH, SJ, AL-L, NL, AL, GM, JM, AM, EM, MN, SPe, GP, NP, SPo, RP, NR, JS, MTa, HT, MTe, TT, PT, JT, CW, and FW contributed significant ideas and text to the article.

Funding

The Ocean Best Practices project has received funding from the European Union’s Horizon 2020 Research and Innovation Program under grant agreement no. 633211 (AtlantOS), no. 730960 (SeaDataCloud) and no. 654310 (ODIP). Funding was also received from the NSF OceanObs Research Coordination Network under NSF grant 1143683. The Best Practices Handbook for fixed observatories has been funded by the FixO3 project financed by the European Commission through the Seventh Framework Programme for Research, grant agreement no. 312463. The Harmful Algal Blooms Forecast Report was funded by the Interreg Atlantic Area Operational Programme Project PRIMROSE (Grant Agreement No. EAPA_182/2016), and the AtlantOS project (see above). PB acknowledges funding from the Helmholtz Programme Frontiers in Arctic Marine Monitoring (FRAM) conducted by the Alfred-Wegener-Institut. JM acknowledges funding from the WeObserve project under the European Union’s Horizon 2020 Research and Innovation Program (grant agreement no. 776740). MTe acknowledges support from the US National Science Foundation grant OCE-1840868 to the Scientific Committee on Oceanic Research (SCOR, US). FM-K acknowledges support by NSF Grant 1728913 ‘OceanObs Research Coordination Network’. Funding was also provided by NASA grant NNX14AP62A ‘National Marine Sanctuaries as Sentinel Sites for a Demonstration Marine Biodiversity Observation Network (MBON)’ funded under the National Ocean Partnership Program (NOPP RFP NOAA-NOS-IOOS-2014-2003803 in partnership between NOAA, BOEM, and NASA), and the U.S. Integrated Ocean Observing System (IOOS) Program Office.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer LL declared a past co-authorship and shared affiliation with one of the authors LC to the handling Editor.

Acknowledgments

We acknowledge the contributions of some of the authors (MB, PB, CCh, FM-K, JH, EH, JK, CM-M, FP, JP, PP, NR, and PS) to the Ocean Best Practice Working Group that has been instrumental in building the foundation for the Ocean Best Practice System. We would like to thank LL and ML for their thoughtful contributions as reviewers, which resulted in many improvements in the manuscript. We would like to thank Nina Hall and Brittany Alexander for their support in creating and maturing the Frontiers in Marine Science Best Practices in Ocean Observing Research Topic.

Abbreviations