Mapping Cynodon Dactylon Infesting Cover Crops with an Automatic Decision Tree-OBIA Procedure and UAV Imagery for Precision Viticulture

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Fields and UAV Imagery Acquisition

2.2. Generation of the Digital Surface Model (DSM) and Image Mosaicking

2.3. Ground Truth Data

2.4. Optimum Feature Selection

2.4.1. Image Segmentation and Definition of Object-Based Features

2.4.2. Decision Tree Modeling and Model Evaluation

2.5. Object-Based Image Analysis

2.5.1. OBIA Algorithm Development

2.5.2. OBIA Model Validation

3. Results and Discussion

3.1. Machine Learning Analysis-Features Selected

3.2. Image Analysis

3.2.1. Description of the OBIA-Algorithm Developed Using DT Modeling

- Vine classification: vine objects were automatically identified and classified on the basis of the DSM information, which avoided their misclassification as cover crop or weed due to spectral similarity, as described by [9]. First, chessboard segmentation was performed to generate objects. Then, the DSM standard deviation feature was used to define "vine candidates", and a subsequent pixel-level analysis comparing their DSM value with that of the surrounding soil square refined the delimitation of vine objects and classified the remaining land covers as not-vineyard. This approach to identifying vine objects has clear advantages: it prevents errors caused by any field slope and decreases the computational time of the full process without penalizing the segmentation accuracy [9].

- Inter-row land cover classification: once the vines were identified, the remaining land covers in the vineyard were classified by the following three steps:

- 2.1. Segmentation: the orthomosaic was segmented with the multiresolution segmentation (MRS) algorithm using the spectral (R, G, and B) information. MRS is a bottom-up segmentation algorithm based on a pairwise region merging technique; it requires the definition of several parameters (scale, color/shape, smoothness/compactness) to subdivide the image into homogeneous objects, which are plant objects in this research. The values of these parameters were set to 5, 0.3, 0.5, and 0.5 for scale, color, shape, smoothness, and compactness, respectively, to generate objects adjusted to the actual shape of cover crop and weed plants. These values were obtained in a preliminary study using a large set of vineyard plot imagery.

- 2.2. Bare soil thresholding: following the results obtained in the DT analysis, the bare soil objects were first separated from the vegetation (cover crop and C. dactylon) using the ExR index. The automatic selection of the optimal threshold value in each image was carried out by implementing the Otsu method (an iterative threshold approach defined by [77]) in the algorithm according to [78].

- 2.3. Cover crop and C. dactylon classification: once the bare soil was separated, the remaining objects of the image, corresponding to vegetation, were discriminated and classified using the VEG index based on the DT results. The optimal threshold value to separate cover crop and bermudagrass was automatically obtained in each image using the Otsu method. Therefore, no user intervention was necessary at any stage of the classification.

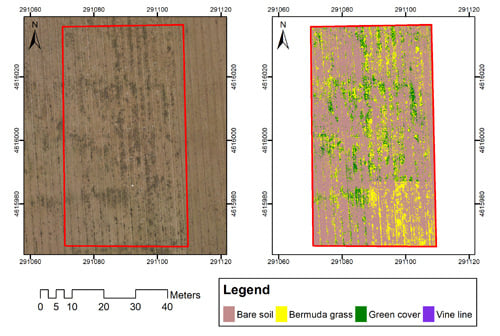

- C. dactylon mapping: a classified map composed of the vines, bare soil, cover crop plants and C. dactylon patches was generated. From the map, the OBIA algorithm identified every vine, bermudagrass and cover crop plant, and their geographic coordinates and surface values were reported.
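The two thresholding steps (2.2 and 2.3) can be illustrated with a minimal Python sketch. It is not the authors' eCognition rule set. It assumes that each object is summarized by its mean R, G, and B values, that ExR and VEG follow their commonly used definitions (ExR = 1.4r − g and VEG = g / (r^a · b^(1−a)) with a = 0.667, where r, g, b are normalized chromatic coordinates), and that objects with a lower VEG value correspond to C. dactylon. The function names `otsu_threshold` and `classify_objects` are hypothetical, and Otsu's criterion is evaluated here by an exhaustive search over histogram bins.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Return the split that maximizes the between-class variance (Otsu)."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    weights = hist.astype(float)
    # Class weights and class means for every candidate split position.
    w0 = np.cumsum(weights)
    w1 = weights.sum() - w0
    cum_sum = np.cumsum(weights * centers)
    mu0 = cum_sum / np.where(w0 > 0, w0, 1)
    mu1 = (cum_sum[-1] - cum_sum) / np.where(w1 > 0, w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    # Values in bins 0..k lie strictly below the upper edge of bin k.
    return edges[np.argmax(between) + 1]


def classify_objects(mean_r, mean_g, mean_b, a=0.667):
    """Label objects as bare soil, cover crop, or C. dactylon."""
    rgb = np.stack([mean_r, mean_g, mean_b]).astype(float)
    total = rgb.sum(axis=0)
    r, g, b = rgb / np.where(total > 0, total, 1)

    exr = 1.4 * r - g  # assumed ExR definition
    veg = g / np.maximum(r ** a * b ** (1 - a), 1e-9)  # assumed VEG definition

    # Step 2.2: objects with low ExR are treated as vegetation.
    labels = np.full(exr.shape, "bare soil", dtype=object)
    vegetation = exr < otsu_threshold(exr)

    # Step 2.3: a second Otsu threshold on VEG, vegetation objects only.
    # Assumption: the less green class (lower VEG) is C. dactylon.
    veg_thr = otsu_threshold(veg[vegetation])
    labels[vegetation & (veg >= veg_thr)] = "cover crop"
    labels[vegetation & (veg < veg_thr)] = "C. dactylon"
    return labels
```

Because both thresholds are derived from the histogram of each image, the sketch needs no user-defined cut-off values, which mirrors the fully automatic behavior described above.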

3.2.2. Evaluation of the DT-OBIA Algorithm for Weed Mapping

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Tey, Y.S.; Brindal, M. Factors influencing the adoption of precision agricultural technologies: A review for policy implications. Precis. Agric. 2012, 13, 713–730.

2. Arnó, J.; Casasnovas, J.A.M.; Dasi, M.R.; Rosell, J.R. Review. Precision viticulture. Research topics, challenges and opportunities in site-specific vineyard management. Span. J. Agric. Res. 2009, 7, 779–790.

3. De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

4. Shi, Y.; Thomasson, J.A.; Murray, S.C.; Pugh, N.A.; Rooney, W.L.; Shafian, S.; Rajan, N.; Rouze, G.; Morgan, C.L.S.; Neely, H.L.; et al. Unmanned Aerial Vehicles for High-Throughput Phenotyping and Agronomic Research. PLoS ONE 2016, 11, e0159781.

5. Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Peres, E.; Morais, R.; Sousa, J.J. Multi-Temporal Vineyard Monitoring through UAV-Based RGB Imagery. Remote Sens. 2018, 10, 1907.

6. Peña, J.M.; Torres-Sánchez, J.; de Castro, A.I.; Kelly, M.; López-Granados, F. Weed Mapping in Early-Season Maize Fields Using Object-Based Analysis of Unmanned Aerial Vehicle (UAV) Images. PLoS ONE 2013, 8, e77151.

7. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

8. Poblete-Echeverría, C.; Olmedo, G.F.; Ingram, B.; Bardeen, M. Detection and Segmentation of Vine Canopy in Ultra-High Spatial Resolution RGB Imagery Obtained from Unmanned Aerial Vehicle (UAV): A Case Study in a Commercial Vineyard. Remote Sens. 2017, 9, 268.

9. De Castro, A.I.; Jiménez-Brenes, F.M.; Torres-Sánchez, J.; Peña, J.M.; Borra-Serrano, I.; López-Granados, F. 3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications. Remote Sens. 2018, 10, 584.

10. Weiss, M.; Baret, F. Using 3D Point Clouds Derived from UAV RGB Imagery to Describe Vineyard 3D Macro-Structure. Remote Sens. 2017, 9, 111.

11. Albetis, J.; Duthoit, S.; Guttler, F.; Jacquin, A.; Goulard, M.; Poilvé, H.; Féret, J.-B.; Dedieu, G. Detection of Flavescence dorée Grapevine Disease Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2017, 9, 308.

12. del-Campo-Sanchez, A.; Ballesteros, R.; Hernandez-Lopez, D.; Ortega, J.F.; Moreno, M.A.; on behalf of Agroforestry and Cartography Precision Research Group. Quantifying the effect of Jacobiasca lybica pest on vineyards with UAVs by combining geometric and computer vision techniques. PLoS ONE 2019, 14, e0215521.

13. Di Gennaro, S.F.; Battiston, E.; Marco, S.D.; Facini, O.; Matese, A.; Nocentini, M.; Palliotti, A.; Mugnai, L. Unmanned Aerial Vehicle (UAV)-Based Remote Sensing to Monitor Grapevine Leaf Stripe Disease within a Vineyard Affected by Esca Complex. Available online: https://link.galegroup.com/apps/doc/A533409412/AONE?sid=lms (accessed on 4 December 2019).

14. Rey-Caramés, C.; Diago, M.P.; Martín, M.P.; Lobo, A.; Tardaguila, J. Using RPAS Multi-Spectral Imagery to Characterise Vigour, Leaf Development, Yield Components and Berry Composition Variability within a Vineyard. Remote Sens. 2015, 7, 14458–14481.

15. Matese, A.; Di Gennaro, S.F.; Santesteban, L.G. Methods to compare the spatial variability of UAV-based spectral and geometric information with ground autocorrelated data. A case of study for precision viticulture. Comput. Electron. Agric. 2019, 162, 931–940.

16. Pádua, L.; Marques, P.; Adão, T.; Guimarães, N.; Sousa, A.; Peres, E.; Sousa, J.J. Vineyard Variability Analysis through UAV-Based Vigour Maps to Assess Climate Change Impacts. Agronomy 2019, 9, 581.

17. Torres-Sánchez, J.; Marín, D.; De Castro, A.I.; Oria, I.; Jiménez-Brenes, F.M.; Miranda, C.; Santesteban, L.G.; López-Granados, F. Assessment of vineyard trimming and leaf removal using UAV photogrammetry. In Precision Agriculture’19; Wageningen Academic Publishers: Wageningen, The Netherlands, 2019; pp. 187–192. ISBN 978-9-08-686337-2.

18. Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522.

19. Romero, M.; Luo, Y.; Su, B.; Fuentes, S. Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 2018, 147, 109–117.

20. Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

21. Campos, J.; Llop, J.; Gallart, M.; García-Ruiz, F.; Gras, A.; Salcedo, R.; Gil, E. Development of canopy vigour maps using UAV for site-specific management during vineyard spraying process. Precis. Agric. 2019, 20, 1136–1156.

22. Jiménez-Brenes, F.M.; López-Granados, F.; Torres-Sánchez, J.; Peña, J.M.; Ramírez, P.; Castillejo-González, I.L.; De Castro, A.I. Automatic UAV-based detection of Cynodon dactylon for site-specific vineyard management. PLoS ONE 2019, 14, e0218132.

23. Gago, P.; Cabaleiro, C.; Garcia, J. Preliminary study of the effect of soil management systems on the adventitious flora of a vineyard in northwestern Spain. Crop Prot. 2007, 26, 584–591.

24. Valencia, F.; Civit, J.; Esteve, J.; Recasens, J. Cover-crop management to control Cynodon dactylon in vineyards: Balance between efficiency and sustainability. In Proceedings of the 7th International Weed Science Conference, Prague, Czech Republic, 19–25 June 2016.

25. Baumgartner, K.; Steenwerth, K.L.; Veilleux, L. Cover-Crop Systems Affect Weed Communities in a California Vineyard. Weed Sci. 2008, 56, 596–605.

26. Ingels, C.A.; Bugg, R.L.; McGourty, G.T.; Christensen, L.P. Cover Cropping in Vineyards: A Grower’s Handbook; University of California Cooperative Extension Amador County; Amador County Publication: Amador County, CA, USA, 1998.

27. Hartwig, N.L.; Ammon, H.U. Cover crops and living mulches. Weed Sci. 2002, 50, 688–699.

28. Ripoche, A.; Metay, A.; Celette, F.; Gary, C. Changing the soil surface management in vineyards: Immediate and delayed effects on the growth and yield of grapevine. Plant Soil 2011, 339, 259–271.

29. Gómez, J.A.; Llewellyn, C.; Basch, G.; Sutton, P.B.; Dyson, J.S.; Jones, C.A. The effects of cover crops and conventional tillage on soil and runoff loss in vineyards and olive groves in several Mediterranean countries. Soil Use Manag. 2011, 27, 502–514.

30. Clark, A. Managing Cover Crops Profitably, Third Edition. Handbook Series Book 9; The Sustainable Agriculture Research and Education (SARE) Program; United Book Press, Inc.: Gwynn Oak, MD, USA, 2012.

31. Recasens, J.; Cabrera, C.; Valencia, F.; de Castro, A.I.; Royo-Esnal, A.; Torres-Sánchez, J.; Civit, J.; Jiménez-Brenes, J.M.; López-Granados, F. Manejo, dinámica espacio-temporal y detección aérea de rodales de Cynodon dactylon en viñedos con cubierta vegetal. In Proceedings of the XVII Actas Congreso de la Sociedad Española de Malherbología, Vigo, Spain, 8–10 October 2019; pp. 231–236.

32. Holm, L.R.G.; Plucknett, D.L.; Pancho, J.V.; Herberger, J.P. The World’s Worst Weeds. Distribution and Biology. Available online: https://www.cabi.org/isc/abstract/19776719958 (accessed on 31 October 2019).

33. FAO Plant Production and Protection Division: Cynodon Dactylon. Available online: http://www.fao.org/agriculture/crops/thematic-sitemap/theme/biodiversity/weeds/listweeds/cyn-dac/en/ (accessed on 31 October 2019).

34. Fontenot, D.P.; Griffin, J.L.; Bauerle, M.J. Bermudagrass (Cynodon dactylon) competition with sugarcane at planting. J. Am. Soc. Sugar Cane Technol. 2016, 36, 19–30.

35. Judge, C.A.; Neal, J.C.; Derr, J.F. Response of Japanese Stiltgrass (Microstegium vimineum) to Application Timing, Rate, and Frequency of Postemergence Herbicides. Weed Technol. 2005, 19, 912–917.

36. Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz-Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

37. López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; Mesas-Carrascosa, F.-J.; Peña, J.-M. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199.

38. Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-Throughput 3-D Monitoring of Agricultural-Tree Plantations with Unmanned Aerial Vehicle (UAV) Technology. PLoS ONE 2015, 10, e0130479.

39. De Castro, A.I.; Rallo, P.; Suárez, M.P.; Torres-Sánchez, J.; Casanova, L.; Jiménez-Brenes, F.M.; Morales-Sillero, A.; Jiménez, M.R.; López-Granados, F. High-Throughput System for the Early Quantification of Major Architectural Traits in Olive Breeding Trials Using UAV Images and OBIA Techniques. Front. Plant Sci. 2019, 10, 1472.

40. Yurtseven, H.; Akgul, M.; Coban, S.; Gulci, S. Determination and accuracy analysis of individual tree crown parameters using UAV based imagery and OBIA techniques. Measurement 2019, 145, 651–664.

41. Komárek, J.; Klouček, T.; Prošek, J. The potential of Unmanned Aerial Systems: A tool towards precision classification of hard-to-distinguish vegetation types? Int. J. Appl. Earth Obs. Geoinf. 2018, 71, 9–19.

42. Ostos-Garrido, F.J.; de Castro, A.I.; Torres-Sánchez, J.; Pistón, F.; Peña, J.M. High-Throughput Phenotyping of Bioethanol Potential in Cereals Using UAV-Based Multi-Spectral Imagery. Front. Plant Sci. 2019, 10, 948.

43. Koh, J.C.O.; Hayden, M.; Daetwyler, H.; Kant, S. Estimation of crop plant density at early mixed growth stages using UAV imagery. Plant Methods 2019, 15, 64.

44. Gao, J.; Liao, W.; Nuyttens, D.; Lootens, P.; Vangeyte, J.; Pižurica, A.; He, Y.; Pieters, J.G. Fusion of pixel and object-based features for weed mapping using unmanned aerial vehicle imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 67, 43–53.

45. Peña, J.M.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; López-Granados, F. Quantifying Efficacy and Limits of Unmanned Aerial Vehicle (UAV) Technology for Weed Seedling Detection as Affected by Sensor Resolution. Sensors 2015, 15, 5609–5626.

46. López-Granados, F.; Torres-Sánchez, J.; de Castro, A.I.; Serrano-Pérez, A.; Mesas-Carrascosa, F.J.; Peña, J.M. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36, 67.

47. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

48. Ma, L.; Fu, T.; Blaschke, T.; Li, M.; Tiede, D.; Zhou, Z.; Ma, X.; Chen, D. Evaluation of Feature Selection Methods for Object-Based Land Cover Mapping of Unmanned Aerial Vehicle Imagery Using Random Forest and Support Vector Machine Classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.

49. Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. Selecting patterns and features for between- and within- crop-row weed mapping using UAV-imagery. Expert Syst. Appl. 2016, 47, 85–94.

50. Hung, C.; Xu, Z.; Sukkarieh, S. Feature Learning Based Approach for Weed Classification Using High Resolution Aerial Images from a Digital Camera Mounted on a UAV. Remote Sens. 2014, 6, 12037–12054.

51. Hamedianfar, A.; Shafri, H.Z.M. Integrated approach using data mining-based decision tree and object-based image analysis for high-resolution urban mapping of WorldView-2 satellite sensor data. J. Appl. Remote Sens. 2016, 10, 025001.

52. Laliberte, A.S.; Browning, D.M.; Rango, A. A comparison of three feature selection methods for object-based classification of sub-decimeter resolution UltraCam-L imagery. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 70–78.

53. De Castro, A.I.; Ehsani, R.; Ploetz, R.; Crane, J.H.; Abdulridha, J. Optimum spectral and geometric parameters for early detection of laurel wilt disease in avocado. Remote Sens. Environ. 2015, 171, 33–44.

54. Lu, J.; Ehsani, R.; Shi, Y.; Abdulridha, J.; de Castro, A.I.; Xu, Y. Field detection of anthracnose crown rot in strawberry using spectroscopy technology. Comput. Electron. Agric. 2017, 135, 289–299.

55. Lu, J.; Ehsani, R.; Shi, Y.; De Castro, A.I.; Wang, S. Detection of multi-tomato leaf diseases (late blight target and bacterial spots) in different stages by using a spectral-based sensor. Sci. Rep. 2018, 8, 2793.

56. Wang, Y.Y.; Li, J. Feature-selection ability of the decision-tree algorithm and the impact of feature-selection/extraction on decision-tree results based on hyperspectral data. Int. J. Remote Sens. 2008, 29, 2993–3010.

57. Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

58. Vieira, M.A.; Formaggio, A.R.; Rennó, C.D.; Atzberger, C.; Aguiar, D.A.; Mello, M.P. Object Based Image Analysis and Data Mining applied to a remotely sensed Landsat time-series to map sugarcane over large areas. Remote Sens. Environ. 2012, 123, 553–562.

59. Laliberte, A.S.; Rango, A. Texture and Scale in Object-Based Analysis of Subdecimeter Resolution Unmanned Aerial Vehicle (UAV) Imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

60. Kalantar, B.; Mansor, S.B.; Sameen, M.I.; Pradhan, B.; Shafri, H.Z.M. Drone-based land-cover mapping using a fuzzy unordered rule induction algorithm integrated into object-based image analysis. Int. J. Remote Sens. 2017, 38, 2535–2556.

61. Sarron, J.; Malézieux, É.; Sané, C.A.B.; Faye, É. Mango Yield Mapping at the Orchard Scale Based on Tree Structure and Land Cover Assessed by UAV. Remote Sens. 2018, 10, 1900.

62. Torres-Sánchez, J.; López-Granados, F.; Borra-Serrano, I.; Peña, J.M. Assessing UAV-collected image overlap influence on computation time and digital surface model accuracy in olive orchards. Precis. Agric. 2018, 19, 115–133.

63. McCoy, R.M. Field Methods in Remote Sensing; Canadian Geographer/Le Géographe Canadien; The Guilford Press: New York, NY, USA, 2005.

64. Peña, J.M.; Gutiérrez, P.A.; Hervás-Martínez, C.; Six, J.; Plant, R.E.; López-Granados, F. Object-Based Image Classification of Summer Crops with Machine Learning Methods. Remote Sens. 2014, 6, 5019–5041.

65. Dorigo, W.; Lucieer, A.; Podobnikar, T.; Čarni, A. Mapping invasive Fallopia japonica by combined spectral, spatial, and temporal analysis of digital orthophotos. Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 185–195.

66. De Castro, A.I.; Ehsani, R.; Ploetz, R.C.; Crane, J.H.; Buchanon, S. Detection of Laurel Wilt Disease in Avocado Using Low Altitude Aerial Imaging. PLoS ONE 2015, 10, e0124642.

67. eCognition Developer 9.2 User Guide; Trimble Geospatial: Munich, Germany, 2017.

68. Girolamo-Neto, C.D.; Sanches, I.D.; Neves, A.K.; Prudente, V.H.R.; Körting, T.S.; Picoli, M.C.A.; De Aragão, L.E.O.e.C. Assessment of Texture Features for Bermudagrass (Cynodon dactylon) Detection in Sugarcane Plantations. Drones 2019, 3, 36.

69. Baraldi, A.; Parmiggiani, F. An investigation of the textural characteristics associated with gray level cooccurrence matrix statistical parameters. IEEE Trans. Geosci. Remote Sens. 1995, 33, 293–304.

70. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

71. Özdemir, T.; Eyduran, E. Comparison of Chi-Square and Likelihood Ratio Chi-Square Tests: Power of Test. J. Appl. Sci. Res. 2005, 1, 242–244.

72. Rogan, J.; Franklin, J.; Roberts, D.A. A comparison of methods for monitoring multitemporal vegetation change using Thematic Mapper imagery. Remote Sens. Environ. 2002, 80, 143–156.

73. Whiteside, T.G.; Maier, S.W.; Boggs, G.S. Area-based and location-based validation of classified image objects. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 117–130.

74. Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

75. Swets, J.A. Measuring the accuracy of diagnostic systems. Science 1988, 240, 1285–1293.

76. Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338.

77. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

78. Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52.

79. Thomlinson, J.R.; Bolstad, P.V.; Cohen, W.B. Coordinating methodologies for scaling landcover classifications from site-specific to global: Steps toward validating global map products. Remote Sens. Environ. 1999, 70, 16–28.

80. Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

81. De Castro, A.I.; López Granados, F.; Jurado-Expósito, M. Broad-scale cruciferous weed patch classification in winter wheat using QuickBird imagery for in-season site-specific control. Precis. Agric. 2013, 14, 392–413.

82. Moffett, K.B.; Gorelick, S.M. Distinguishing wetland vegetation and channel features with object-based image segmentation. Int. J. Remote Sens. 2012, 34, 1332–1354.

83. Chauhan, B.S.; Singh, R.G.; Mahajan, G. Ecology and management of weeds under conservation agriculture: A review. Crop Prot. 2012, 38, 57–65.

| Field | Area (m²) | Vine Plantation Year | Cover Crop Species | Flight Date | Purpose of Data |
|---|---|---|---|---|---|
| C-16 | 3661 | 1988 | Hordeum vulgare | 1st February 2016 | Algorithm Training (Feature selection) |
| C-17 | 3988 | 1988 | Hordeum vulgare | 24th January 2017 | Algorithm Training (Feature selection) |
| A-16 | 2663 | 2015 | Festuca arundinacea | 1st February 2016 | Algorithm Validation |
| B-16 | 3863 | 2015 | Hordeum vulgare, Vulpia ciliata, Bromus rubens, Bromus hordeaceus, Festuca arundinacea, Medicago rugosa | 1st February 2016 | Algorithm Validation |

| Category | Name | Equation a | Adapted from |
|---|---|---|---|
| Object Spectral | Mean | | --- |
| Object Spectral | SD | | --- |
| Object Spectral | Mode | Most common value | |
| Vegetation indices | Excess green | | [51] |
| Vegetation indices | Excess red | | [52] |
| Vegetation indices | Excess green minus excess red | | [53] |
| Vegetation indices | R-G | | [54] |
| Vegetation indices | Color index of vegetation | | [55] |
| Vegetation indices | Green vegetation index | | [56] |
| Vegetation indices | Vegetative | | [57] |
| Vegetation indices | Combination 1 | | [58] |
| Textural features | GLCM Homogeneity | | After [50] |
| Textural features | GLCM Contrast | | After [50] |
| Textural features | GLCM Dissimilarity | | After [50] |
| Textural features | GLCM Entropy | | After [50] |
| Textural features | GLCM Ang. 2nd moment | | After [50] |
| Textural features | GLCM StdDev | | After [50] |
| Textural features | GLCM Correlation | | After [50] |

| Features selected | Field C-16 (% G²) | Field C-17 (% G²) |
|---|---|---|
| ExR | 59 | 92 |
| VEG | 41 | 8 |

| Vineyard | GA * (%) | CCCR (%) | Area under the ROC Curve | RMSE |
|---|---|---|---|---|
| C-17 | 97.6 | 96.6 | BS: 0.98; CD: 0.98; CC: 0.95 | 0.16 |
| C-16 | 98.4 | 98.0 | BS: 0.99; CD: 0.99; CC: 0.99 | 0.12 |

| Vineyard | OA * (%) | C. dactylon UA (%) |
|---|---|---|
| A-16 | 89.82 | 98.00 |
| B-16 | 84.03 | 98.50 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


de Castro, A.I.; Peña, J.M.; Torres-Sánchez, J.; Jiménez-Brenes, F.M.; Valencia-Gredilla, F.; Recasens, J.; López-Granados, F. Mapping Cynodon Dactylon Infesting Cover Crops with an Automatic Decision Tree-OBIA Procedure and UAV Imagery for Precision Viticulture. Remote Sens. 2020, 12, 56. https://doi.org/10.3390/rs12010056
