Abstract

Ductile failure of structural metals is relevant to a wide range of engineering scenarios. Computational methods are employed to anticipate the critical conditions of failure, yet they sometimes provide inaccurate and misleading predictions. Challenge scenarios, such as the one presented in the current work, provide an opportunity to assess the blind, quantitative predictive ability of simulation methods against a previously unseen failure problem. Rather than evaluate the predictions of a single simulation approach, the Sandia Fracture Challenge relies on numerous volunteer teams with expertise in computational mechanics to apply a broad range of computational methods, numerical algorithms, and constitutive models to the challenge. This exercise is intended to evaluate the state of health of technologies available for failure prediction. In the first Sandia Fracture Challenge, a wide range of issues were raised in ductile failure modeling, including a lack of consistency in failure models, the importance of shear calibration data, and difficulties in quantifying the uncertainty of prediction [see Boyce et al. (Int J Fract 186:5–68, 2014) for details of these observations]. This second Sandia Fracture Challenge investigated the ductile rupture of a Ti–6Al–4V sheet under both quasi-static and modest-rate dynamic loading (failure in \(\sim \)0.1 s). Like the previous challenge, the sheet had an unusual arrangement of notches and holes that added geometric complexity and fostered a competition between tensile- and shear-dominated failure modes. The teams were asked to predict the fracture path and quantitative far-field failure metrics such as the peak force and displacement to cause crack initiation. Fourteen teams contributed blind predictions, and the experimental outcomes were quantified in three independent test labs. 
Additional shortcomings were revealed in this second challenge such as inconsistency in the application of appropriate boundary conditions, need for a thermomechanical treatment of the heat generation in the dynamic loading condition, and further difficulties in model calibration based on limited real-world engineering data. As with the prior challenge, this work not only documents the ‘state-of-the-art’ in computational failure prediction of ductile tearing scenarios, but also provides a detailed dataset for non-blind assessment of alternative methods.

1 Introduction

Computational simulations are often called upon to render predictions for a wide range of failure scenarios in mechanical, structural, aerospace, and civil engineering, where full-scale field tests are usually difficult, expensive, and time-consuming. Fracture simulation is deeply rooted in computational solid mechanics and dates back to the 1970s, e.g. Norris et al. (1977). Since that time, there have been ongoing efforts to develop realistic physical models and efficient computational implementations that improve reliability, speed, robustness and, above all, accuracy. However, generating predictions that have adequate confidence levels still poses significant difficulties for the simulation community. To elucidate the accuracy of these predictions, it is necessary to evaluate existing models in validation scenarios that approximate the conditions seen in practical applications. For this purpose, Sandia National Laboratories has organized a series of fracture challenges where participants are asked to predict quantities of interest (QoIs) in a given fracture scenario. The participants have never seen the challenge scenario previously, so the predictions are “blind” as is often the case in real engineering predictions. Moreover, while the scenarios are geometrically simple, they present mechanical complexities that are impossible to predict with intuition or simple calculations alone. After the blind predictions are reported, they are subsequently compared against experimental results to determine how closely they replicated the fracture behavior observed in the laboratory. The current paper describes the second Sandia Fracture Challenge (SFC2) issued in 2014 to exercise capabilities in predicting fracture at both quasi-static and modest dynamic loading rates in a Ti–6Al–4V sheet.

The Sandia Fracture Challenge series has three main purposes. Firstly, the Challenge is an assessment of state-of-the-art techniques for accurately predicting problems involving ductile fracture. These methods cover many models and approaches pursued by academia and industry to deal with ductile fracture analysis. Secondly, the blind prediction environment offers individual participating teams an environment of “true blindness” to evaluate the strengths and weaknesses of their methodology. This unique setup is a precious opportunity to refine their methods and tools. Thirdly, the Sandia Fracture Challenge has brought together a group of teams that have been actively working in the ductile fracture area for many years. Each team worked independently on the same fracture problem. The collective wisdom obtained by attacking a single specific task with a variety of strategies strengthens our understanding of ductile fracture. This Challenge process helps identify current difficulties, builds experience for avoiding certain pitfalls in future efforts, and fosters collaboration between universities, national laboratories, and industries around the world. It also contributes to a cumulative learning process.

In 2012, the first Sandia Fracture Challenge (SFC1) was designed to assess the mechanics community’s capability to predict the failure of a ductile structural metal in an unfamiliar test geometry. The results were documented in a special issue of the International Journal of Fracture (Boyce et al. 2014). With no direct funding provided to the participants, thirteen teams with over 50 participants from over 20 different institutions responded to the challenge. The task was to predict the initiation and propagation of a crack in a ductile structural stainless steel (15-5PH) under quasi-static room temperature test conditions. The test geometry was a flat panel that contained a round root pre-cut slot and multiple nearby holes that could influence the notch-tip stress state. The placement of the holes created a competition between a tensile-dominated and shear-dominated failure mode. To calibrate their models, the participants were provided with tensile test data, sharp crack mode-I fracture data, and some limited microstructural information.

The first Sandia Fracture Challenge was designed based on lessons learned in earlier double-blind assessments, where it became obvious that the double-blind evaluation methodology should be governed by some common constraints (Boyce et al. 2011). These common constraints also apply to the second Sandia Fracture Challenge presented here. They include: (1) The sample geometry should be readily manufactured with easily measured geometric features. (2) The manufacturing process should avoid unintentional complications such as significant residual stresses or non-negligible surface damage. (3) The QoIs, such as forces and displacements, should be readily measurable with common instrumentation so that the tests can be repeated in multiple labs in a cost effective manner. (4) The experiment should involve simple, uniaxial loading conditions that are readily tested with common lab-scale load frames and common grips. (5) The sample and loading conditions should avoid unwanted modes of deformation such as buckling. Since the challenge scenario involves a novel test geometry, the repeatability of the behavior may not be apparent until after significant experimental effort. In the present work and similar, prior efforts at Sandia, the experiments were not performed until after the computational challenge had been issued. This approach ensured that all participants, including the experimentalists, were not biased by any prior knowledge of the outcome.

The outcome of the first Challenge motivated this second Challenge, which explores several new facets: (1) in addition to quasi-static loading, the scenario also involves modest dynamic loading spanning three orders of magnitude in strain-rate, (2) the sharp-crack fracture toughness data is replaced with V-notch shear failure data, (3) rather than providing only machining tolerances based on engineering drawings, the participants were provided with actual specimen dimension measurements, and (4) the alloy was changed to a titanium alloy with low work hardening behavior. Ti–6Al–4V is a common lightweight, heat-treatable alloy that provides an excellent combination of mechanical properties with high specific strength, corrosion resistance, and weldability, and it is widely used in aircraft, spacecraft, and medical devices.

As with the first challenge, the second challenge provided all participants with experimental data, for model calibration, on the same lot of material used to produce the blind challenge geometries. Two types of material testing were performed and provided to all participants. One was the commonly used simple tension test of a dog-bone coupon. Because of possible anisotropy, the simple tension tests were performed in the rolling (longitudinal) direction and the transverse direction. A shear-dominated test was also performed on a double V-notch plate for the rolling and transverse material directions. These tests may not be sufficient to calibrate all parameters for the material constitutive models used by the participants, since some of these models are complicated and can have several dozen parameters. These material tests were chosen to mimic a real engineering scenario where limited material testing data is available, but should be sufficient to characterize the essential parts of the mechanical response for use in a numerical simulation. These tests were all performed at the two different nominal loading rates that are three orders of magnitude apart and that are the same as the loading rates used for loading the Challenge sample.

Similar to the first Sandia Fracture Challenge, the Second Challenge specimen was also a flat plate with multiple geometrical features that facilitate fracture initiation and growth. Two anti-symmetric slots perpendicular to the loading direction were cut. The roots of these initial slots were rounded to obscure the fracture initiation site. Three holes of different sizes were drilled in the vicinity of the two slots. The spatial arrangement of the holes was chosen to create a competition between tensile and shear-dominated failure, so that the failure path was not simply intuitive. In the Second Sandia Fracture Challenge, the loading rate creates additional complexity. This includes hardening, strain rate dependence, and the influence of thermal dissipation of plastic work on deformation. These rate effects were not accounted for in the computational approaches in the first Sandia Fracture Challenge, so these phenomena represented an uncharted area for blind predictions.

As with the first challenge, this second challenge was performed under conditions that are generally commensurate with typical engineering tasks: (a) the outcome is unknown during the simulation process (the prediction is blind), (b) the time for the project is limited, and (c) the available calibration data is limited. After prediction results are submitted, the adopted methodologies are evaluated in their ability to predict macroscopic scalar QoIs such as the peak allowable force before failure and the amount of component deflection at various force levels. The experimental results were only shared with the simulation teams after their blind predictions had been reported. After the blind predictions and experimental outcomes had been disseminated to all participants, a meeting was held at the University of Texas at Austin on March 2–3, 2015 to synthesize commonalities that contributed to accurate predictions or systematic errors. The workshop laid the groundwork for this paper that presents the Challenge and the comparison of the predictions.

This paper is organized as follows: Sect. 2 poses the challenge scenario and describes the experimental testing and results for the material characterization. Section 3 provides details of the geometry, test setups, and the experimental results of the Challenge problem specimen. Section 4 is a synopsis of the models and methods used by the participating teams, while the comparison of the prediction results from each team is given in Sect. 5. This is followed by Sect. 6, with discussions on the results and the strength and weakness of adopted methods. Section 7 provides a brief summary. The detailed contributions of each team’s procedure and their engineering judgment are given in “Appendix 1” and detailed measurements of test sample dimensions are provided in “Appendix 2” for potential future assessment.

2 The Challenge

A meaningful, efficient, and ‘fair’ Challenge should be governed by a set of common constraints (Boyce et al. 2011, 2014). First, this ‘toy problem’ or ‘puzzle’ should have no obvious or closed-form solution. It should be sufficiently distinct from other standard or known test geometries so that the outcome of the exercise is unknown to the participants. The scenario should be readily confirmed through experiments. It may be desirable for the challenge scenario to result in a single unambiguous repeatable experimental outcome, or as was the case for the first Sandia Fracture Challenge, the scenario could be near a juncture of two competing outcomes. Since the challenge scenario involves a novel test geometry, the repeatability of the behavior may not be apparent until after significant experimental effort. In the present work and similar, prior efforts at Sandia, the experiments were not performed until after the computational challenge had been issued. This approach ensured that all participants (including the experimentalists) were not biased by any prior knowledge of the outcome.

2.1 The 2014 Sandia Fracture Challenge Scenario

The fracture challenge was advertised and issued to potentially interested parties through a mechanics weblog site, imechanica.org, and through an e-mail solicitation to many known researchers in the fracture community. The fracture challenge was issued on May 30, 2014 and final predictions from participants were due on November 1, 2014, approximately five months after the issuance of the challenge. The initial packet of information contained material processing and tensile test data on mechanical properties, the test specimen geometry, the loading conditions, and instructions on how to report the predictions. Supplemental shear test data was released on August 13, 2014. The degree of detail provided was intended to be commensurate with the information that is typically available in real engineering scenarios in industry. These details regarding the material, test geometry/loading conditions, and QoIs are described in the following three subsections.

2.1.1 Material

The alloy of interest was a commercial sheet stock of mill-annealed Ti–6Al–4V. This alloy was chosen because it is a commonly used alloy in aerospace applications—it is moderately rate sensitive and its very shallow work hardening rate can be challenging for computational models. All test specimens were extracted from a single plate purchased from RTI International Metals, Inc. with a specified thickness of \(3.124\pm 0.050\) mm. The original material certification was provided to the participants, and included the following chemical analysis (in wt%): C 0.010, N 0.004, Fe 0.19, Al 6.02, V 3.94, O 0.16, and Ti 89.676 (Y was also present with less than 50 ppm). The sheet was annealed by the mill first at \(381.3\,^{\circ }\hbox {C}\) (\(718.3\,^{\circ }\hbox {F}\)) for 20 min and then at \(419.9\,^{\circ }\hbox {C}\) (\(787.8\,^{\circ }\hbox {F}\)) for 15 min.

Rockwell C hardness measurements were performed on the Ti–6Al–4V plate. The average of 6 measurements was 36.1 HRC (Rockwell C) which is consistent with mill-annealed Ti–6Al–4V.

2.1.2 Tensile calibration tests

Eighteen tensile coupons were tested, in two orientations and at two loading rates, fast (25.4 mm/s) and slow (0.0254 mm/s), in the Structural Mechanics Laboratory at Sandia National Laboratories. Eight tensile tests were oriented along the rolling direction, with three at the fast rate and five at the slow rate, and ten were oriented along the transverse direction, with five at the fast rate and five at the slow rate. All tests were conducted according to ASTM E8/E8M-13 using the nominal geometry shown in Fig. 1. The as-measured thickness and width dimensions of all eighteen tensile coupons were provided to the participants. The tensile specimen tests were conducted using a uniaxial MTS servo-hydraulic load frame with a 100-kN load cell (\(\pm 1\,\%\) uncertainty of the measured value) at ambient temperature, controlled by an MTS FlexTest Controller. The actuator has an internal calibrated linear variable differential transformer (LVDT) (\({\pm }0.5\,\%\) uncertainty of the measured value) that measured the actuator stroke displacement, which was the control variable. The specimens were gripped using standard manual wedge grips. The velocity of the fast-rate test as measured by the actuator LVDT in the tension tests was also confirmed using digital image correlation to track fiducial markings on the grips imaged by a high-speed Phantom 611 camera. Strain was measured using an extensometer with a 25.4 mm gage length. Engineering stress–strain curves were provided, as well as the raw force-displacement data for each tensile test. Figure 2 shows the resulting engineering stress–strain curves for all eighteen tests. The yield strength, ultimate strength, and elongation values are presented in Table 1.
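The engineering stress–strain curves follow from the raw force and extensometer data by normalizing with the initial cross-section and the 25.4 mm gage length. A minimal Python sketch of that conversion is given here. The function name, the coupon width and thickness, and the data points are hypothetical placeholders chosen for illustration, not values from the test records.

```python
def engineering_stress_strain(forces_n, extensions_mm, width_mm, thickness_mm,
                              gage_length_mm=25.4):
    """Convert force (N) and extensometer extension (mm) to
    engineering stress (MPa) and engineering strain (dimensionless)."""
    area_mm2 = width_mm * thickness_mm                 # initial cross-section A0
    stress_mpa = [f / area_mm2 for f in forces_n]      # N/mm^2 is MPa
    strain = [dl / gage_length_mm for dl in extensions_mm]
    return stress_mpa, strain


# Hypothetical coupon dimensions and readings, for illustration only.
width_mm, thickness_mm = 6.0, 3.1
forces_n = [0.0, 9300.0, 18600.0]
extensions_mm = [0.0, 0.127, 0.254]

stress, strain = engineering_stress_strain(forces_n, extensions_mm,
                                           width_mm, thickness_mm)
for s, e in zip(stress, strain):
    print(f"strain = {e:.4f}, stress = {s:.1f} MPa")
```

Because the as-measured width and thickness were supplied for every coupon, the same conversion could be repeated per specimen rather than with nominal dimensions.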

Fig. 3 Images of fracture morphology for the tensile specimens with different loading rates and material orientations: a–d are optical images from a Keyence microscope and e–f are SEM images. a RD4, 25.4 mm/s loading rate. b TD11, 25.4 mm/s loading rate. c RD7, 0.0254 mm/s loading rate. d TD4, 0.0254 mm/s loading rate. e RD8, 0.0254 mm/s loading rate. f RD9, 25.4 mm/s loading rate

Fig. 4 Images of the geometry of the necking for the tensile specimens with different loading rates and material orientations. Note: The grid lines in each image have a spacing of 5.08 mm, and each sample had a grip vertical height of 12.6 mm. a RD7, 0.0254 mm/s loading rate. b TD4, 0.0254 mm/s loading rate. c RD4, 25.4 mm/s loading rate. d TD12, 25.4 mm/s loading rate

Fig. 5 Specimen geometry for shear tests. Dimensions are in millimeters. The average plate thickness for these specimens was 3.100 mm. VA specimens have the sheet rolling direction parallel to the 55.88 mm length, and the rolling direction in VP specimens is perpendicular to the rolling direction in VA specimens

The test data indicates that there is a dependence of the tensile behavior on the loading rate and to a lesser extent on loading direction. Optical and electron microscope images of the fracture surface morphology (Fig. 3) as well as side-view macro images of the necking profile and fracture plane (Fig. 4) were also provided to the participants.

2.1.3 Shear calibration tests

Eight shear specimens were tested in the Structural Mechanics Laboratory in the same load frame as the tensile samples. Four were loaded in shear in the rolling direction (denoted as VA for the remainder of this section), with two at the fast loading rate and two at the slow loading rate. Four were loaded in shear transverse to the rolling direction (denoted as VP for the remainder of this section), with two at the fast loading rate and two at the slow loading rate. The geometry of the shear specimens was based on ASTM D7078/D7078M-05, with the V-notched rail shear geometry modified (deeper notch than the standard) to reduce the stressed area by more than half, so that failure was induced at lower forces and grip rotation and slippage were minimized. The test specimen geometry for the shear test is shown in Fig. 5. The as-measured dimensions of the shear specimens were provided to the participants.

As shown in Fig. 6, stacked rosette strain gages, with a gage length of 3.18 mm, were affixed to the center of the V-notch shear test specimens and to the left (relative to the front face) of the center. The rosette strain gages to the left of the front face were stacked for four of the test specimens and unstacked for the remaining three test specimens. The rosette gages on the front were paired with gages on the back. The elastic shear modulus was calculated using the central stacked strain gage rosette measurements for the shear strain and an assumption that the shear stress was the measured load divided by the V-notch gage area. The elastic shear modulus from these calculations was 44 GPa, which is consistent with literature values for Ti–6Al–4V.
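The shear modulus estimate described above can be sketched in Python as the slope of shear stress against shear strain in the elastic range. This sketch assumes that two rosette legs are oriented at +45° and −45° to the loading axis, so that the engineering shear strain is the difference of the two readings; the source does not state the rosette orientation. The function name, the ligament width, and all data values are hypothetical, and the synthetic readings were constructed to give a slope of 44 GPa purely to exercise the function.

```python
def shear_modulus_gpa(loads_n, eps_plus45, eps_minus45, ligament_mm, thickness_mm):
    """Least-squares slope of shear stress (MPa) vs. engineering shear strain,
    returned in GPa. Assumes rosette legs at +45 and -45 degrees."""
    area_mm2 = ligament_mm * thickness_mm                   # V-notch gage area
    tau = [p / area_mm2 for p in loads_n]                    # shear stress, MPa
    gamma = [ep - em for ep, em in zip(eps_plus45, eps_minus45)]
    n = len(tau)
    mean_g = sum(gamma) / n
    mean_t = sum(tau) / n
    sxy = sum((g - mean_g) * (t - mean_t) for g, t in zip(gamma, tau))
    sxx = sum((g - mean_g) ** 2 for g in gamma)
    return (sxy / sxx) / 1000.0                              # MPa -> GPa


# Synthetic elastic-range readings (hypothetical, not measured data).
loads_n = [0.0, 1364.0, 2728.0, 4092.0]
eps_plus45 = [0.0, 0.0005, 0.0010, 0.0015]
eps_minus45 = [0.0, -0.0005, -0.0010, -0.0015]

G = shear_modulus_gpa(loads_n, eps_plus45, eps_minus45,
                      ligament_mm=10.0, thickness_mm=3.1)
print(f"G = {G:.1f} GPa")
```

Fitting a slope over several points, rather than dividing a single stress by a single strain, makes the estimate less sensitive to noise in any one reading.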

The shear test fixture was a commercial off-the-shelf “Adjustable Combined Loading Shear (CLS) Fixture” from Wyoming Test Fixtures. In this fixture, each test specimen was held in place by a grip on each side. Each grip had two face grip inserts pressed against the front and back face of the test specimen and one horizontal insert. The specimen was held in place by tightening 5/8-18 UNF stainless bolts on the face grip inserts to 67.8 N m torque and hand tightening 1/2-20 UNF stainless bolts to secure the horizontal insert for each grip. The grip fixtures were made of 17-4PH stainless steel and were rigidly attached to the load frame. Figure 7 shows a close-up view of the shear test setup, including the grip inserts on the fixed side. An axial LVDT mounted in the back of the fixture between the two grip halves provided the overall displacement measurement, and the load cell provided the load measurement.

Two issues arose when performing the shear tests. The first was that the shear specimen slipped within the grips. The second was that the fixture itself had a non-negligible compliance. Additional tests (including tests on non-notched rectangular specimens to quantify the fixture compliance and cyclic load tests to quantify the slip) were performed, and those detailed measurements were provided to the participants. As discussed later, most participants made use of the axial LVDT displacement vs. load data provided either in the direct form as shown in Fig. 8, or with a slip correction that was provided. The non-ideal behavior of this shear test method highlights the need to develop better shear testing standards for metal failure characterization.
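One common way to account for fixture compliance is to subtract a linear, load-proportional displacement from the measured LVDT signal. The Python sketch below illustrates that idea only; it is not the correction that was distributed to the participants, and it assumes that a test on an unnotched specimen can be treated as a measurement of the fixture alone. All function names and numbers are hypothetical.

```python
def fit_compliance(loads_n, displacements_mm):
    """Least-squares slope through the origin: displacement = C * load.
    Returns the compliance C in mm/N."""
    num = sum(f * d for f, d in zip(loads_n, displacements_mm))
    den = sum(f * f for f in loads_n)
    return num / den


def remove_fixture_compliance(loads_n, displacements_mm, compliance_mm_per_n):
    """Subtract the fixture's elastic displacement from each LVDT reading."""
    return [d - compliance_mm_per_n * f
            for f, d in zip(loads_n, displacements_mm)]


# Hypothetical compliance test on an unnotched specimen.
blank_loads_n = [0.0, 1000.0, 2000.0, 3000.0]
blank_disp_mm = [0.0, 0.01, 0.02, 0.03]
C = fit_compliance(blank_loads_n, blank_disp_mm)

# Hypothetical shear test readings to be corrected.
test_loads_n = [0.0, 2000.0, 4000.0]
test_lvdt_mm = [0.0, 0.05, 0.12]
corrected_mm = remove_fixture_compliance(test_loads_n, test_lvdt_mm, C)
print(C, corrected_mm)
```

Grip slip is not load-proportional in general, so it would need a separate treatment, for example one informed by the cyclic load tests mentioned above.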

Figure 9 shows the post-test failure surfaces of selected shear specimens. As seen from the figure, the failure surfaces are at a slight angle with respect to the loading direction. The VA specimens showed a larger angle of the failure surface than the VP specimens. Additionally, the VA slow specimens visually showed a rougher failure surface than the other specimens.

2.1.4 Fracture challenge geometry and loading condition

The Challenge geometry consisted of an S-shaped sheet specimen with two notches and three holes, as shown in Fig. 10 with detailed dimensions. The two notches, labeled “A” and “B” in Fig. 11, were each 6.35 mm wide with an overall length of 28.575 mm. The three holes, “C”, “D”, and “E”, were nominally 3.175, 3.988, and 6.35 mm in size, respectively. In addition, ‘knife-edge’ features were added to each notch edge for the purpose of mounting Crack Opening Displacement (COD) gages. The three holes were introduced into the S-shaped geometry to provide multiple competing crack initiation sites and propagation paths at each notch location.

Pin holes were machined away from each notch tip for the insertion of an 18-mm diameter loading pin. These pin holes provided for standard clevis grip loading in a hydraulic uniaxial load frame. Clevis grips conforming to ASTM E 399 were used; these grips have D-shaped holes with flats to provide a rolling contact that minimizes friction effects. It is important to note that each computational team and experimental testing lab was provided no additional constraints regarding the decision of how to apply boundary conditions. This limited definition of the boundary conditions bears similarity to real world engineering problems, where the detailed boundary conditions are rarely well defined. This limited definition of the boundary conditions for the Challenge geometry would prove to be a source of discrepancy among the participants.

The participants were instructed that the Fracture Challenge would evaluate two different displacement rates of 25.4 and 0.0254 mm/s, spanning 3 orders of magnitude in strain-rate. Any undeclared aspects of loading that were salient to the outcome were considered as sources of potential uncertainty.

2.1.5 Quantities of interest

A set of quantitative questions was posed to the participants to facilitate comparing the analyses to the experimental results. These questions were meant to evaluate the robustness of the analysis technique in predicting deformation, necking conditions, crack initiation, and crack propagation. All challenge participants were requested to provide the QoIs embedded in the following six questions to facilitate quantitative evaluation and analysis of the blind predictions:

For each of the two loading rates, please predict the following outcomes:

- Question 1: Report the force at the following Crack-Opening-Displacements (COD):
  - COD1 \(=\) 1-, 2-, and 3-mm.
- Question 2: Report the peak force of the test.
- Question 3: Report the COD1 and COD2 values after peak force when the force has dropped by 10 % (to 90 % of the peak value).
- Question 4: Report the COD1 and COD2 values after peak force when the force has dropped by 70 % (to 30 % of the peak value).
- Question 5: Report the crack path (use the feature labels in Fig. 11 to report crack path).
- Question 6: Report the expected force-COD1 and force-COD2 curves as two separate ASCII data files.

A COD gage was used to monitor load-line displacement at the point of the ‘knife-edge’ features, akin to fracture toughness testing. Figure 11 shows the locations of COD1 and COD2. The COD measurement was defined for the participants in the following way: “COD1 and COD2 \(= 0\) at the start of the test; the COD values refer to the change in length from the beginning of the test”. All participants were also asked to report their entire predicted force-COD displacement curve. These force-COD curves provided further insight into the efficacy of the methods.
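The scalar QoIs in Questions 1 to 4 can all be read off a force-COD curve. The Python sketch below shows one plausible way to extract them by linear interpolation. The function names and the sample curve are hypothetical, and the sketch assumes a curve sampled with strictly increasing COD and a single dominant peak.

```python
def force_at_cod(cod, force, target):
    """Question 1: force at a given COD, by linear interpolation."""
    for i in range(1, len(cod)):
        if cod[i - 1] <= target <= cod[i]:
            t = (target - cod[i - 1]) / (cod[i] - cod[i - 1])
            return force[i - 1] + t * (force[i] - force[i - 1])
    raise ValueError("target COD is outside the recorded range")


def cod_after_peak(cod, force, fraction):
    """Questions 3 and 4: first COD after the peak at which the force has
    dropped to `fraction` of the peak force. Returns None if never reached."""
    i_peak = max(range(len(force)), key=force.__getitem__)
    level = fraction * force[i_peak]
    for i in range(i_peak + 1, len(force)):
        if force[i] <= level:
            f0, f1 = force[i - 1], force[i]
            t = (f0 - level) / (f0 - f1)
            return cod[i - 1] + t * (cod[i] - cod[i - 1])
    return None


# Hypothetical force-COD1 curve (mm, kN), for illustration only.
cod1 = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
force = [0.0, 10.0, 16.0, 20.0, 18.0, 12.0, 2.0]

forces_q1 = [force_at_cod(cod1, force, c) for c in (1.0, 2.0, 3.0)]
peak_force = max(force)                        # Question 2
cod_90 = cod_after_peak(cod1, force, 0.90)     # Question 3
cod_30 = cod_after_peak(cod1, force, 0.30)     # Question 4
print(forces_q1, peak_force, cod_90, cod_30)
```

The same two functions apply unchanged to a force-COD2 curve. A prediction that never drops to 30 % of peak returns `None` instead of an extrapolated value.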

3 Experimental method and results

The behavior of the challenge specimens was evaluated by three different experimental teams. The primary results are those from the Sandia Structural Mechanics Laboratory, with additional experiments performed at the Sandia Material Mechanics Laboratory and the laboratory of Prof. Ravi-Chandar at the University of Texas at Austin. All three laboratories tested specimens that had been fabricated at the same time from the same plate of material. All three sets of experimental data were generally consistent with one another with regard to the force-displacement behavior and observed failure path. The primary differences in the tests from each laboratory can be summarized as follows: the Sandia Structural Mechanics Lab performed in situ imaging for both loading rates, the Sandia Material Mechanics Lab provided independent confirmation tests with additional local thermal measurements in the high-deformation zones, and the University of Texas Lab performed optical measurements to capture local and global kinematic fields for the slow loading rate. The following subsections cover the pretest characterization of the challenge specimens, the details of the experimental methods used in each laboratory to test them, and post-test characterization of the specimens.

3.1 Observations from the Sandia Structural Mechanics Laboratory

3.1.1 Test setup and methodology

Pre-test geometry measurements

All of the challenge specimens were fabricated by the same machine shop, where the specimens were inspected and measured to ensure compliance with the fabrication drawing. Detailed specimen dimensions were provided to the participants and are tabulated in “Appendix 2”.

Test setup in structural mechanics laboratory

In the Structural Mechanics Lab, eight specimens were tested at a loading rate of 0.0254 mm/s, and seven specimens were tested at a loading rate of 25.4 mm/s. The test setup is shown in Fig. 12. These tests were conducted in displacement control using the same uniaxial MTS servo-hydraulic load frame and 100-kN load cell as was used for the tensile and shear tests. The actuator LVDT was the control variable. Clevis grips from Materials Testing Technology (model number ASTM.E0399.08) were made of 17-4PH stainless steel with a pin diameter of 17.93 mm. These clevis grips conformed to the ASTM E 399 testing standard, as prescribed by the Challenge. Each grip was securely mounted in the load frame using spiral washers to allow for individual rotational alignment of each clevis grip relative to the uniaxial load-train. Precision-cut spacers were used on either side of the sample at each clevis to center the sample in each clevis. Prior to testing, the clevises were aligned using a strain gaged dummy sample, loaded in its elastic regime. The relative displacement between the two clevis grips was measured during each test using an LVDT mounted on each side of the grips. The clevis grip LVDTs were calibrated at the time of use with a Boeckeler Digital Micrometer, with \(\pm 0.508\,\upmu \hbox {m}\) resolution and repeatability within \(\pm 0.508\,\upmu \hbox {m}\). Two COD gages from Epsilon Tech Corp. (SNs E93896, S93897) were used to measure the opening of the two notches in the challenge specimens. The Epsilon COD gages were calibrated at the time of use with a Starrett Micrometer, with \(\pm 0.508\,\upmu \hbox {m}\) resolution and repeatability within \(\pm 2.54\,\upmu \hbox {m}\).

In the fast loading rate tests, a vibrational response was induced in the COD gages, thus causing oscillations in the recorded output. The frequency of the oscillation was approximately 440 Hz. The frequency of the output signal matched what was recorded in the tests. To remove the oscillations, the COD data from all fast-rate tests were filtered using a low-pass, second-order Butterworth filter with cutoff frequency of 220 Hz.
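A second-order Butterworth low-pass filter of the kind described above can be written as a single biquad section obtained from the bilinear transform. The Python sketch below is one such implementation and is not the processing script used for the tests. The 10 kHz sample rate and the synthetic 440 Hz signal are assumptions, since the acquisition rate is not stated here, and the filter is applied in a single forward pass because the source does not say whether zero-phase filtering was used.

```python
import math


def butter2_lowpass_coeffs(cutoff_hz, sample_rate_hz):
    """Biquad coefficients of a second-order Butterworth low-pass filter
    (bilinear transform with frequency pre-warping)."""
    k = math.tan(math.pi * cutoff_hz / sample_rate_hz)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (2.0 * (k * k - 1.0) * norm,
         (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def lowpass(samples, cutoff_hz, sample_rate_hz):
    """Apply the filter in one forward pass (this introduces phase lag)."""
    (b0, b1, b2), (a1, a2) = butter2_lowpass_coeffs(cutoff_hz, sample_rate_hz)
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


fs = 10000.0  # assumed sample rate in Hz (not given in the source)
ringing = [math.sin(2.0 * math.pi * 440.0 * n / fs) for n in range(2000)]
filtered = lowpass(ringing, 220.0, fs)

# Steady-state amplitude of the 440 Hz component after filtering.
residual = max(abs(v) for v in filtered[1000:])
print(f"residual amplitude: {residual:.2f}")
```

Under these assumptions, a unit-amplitude 440 Hz oscillation is reduced to roughly a quarter of its amplitude, while slowly varying content well below 220 Hz passes nearly unchanged.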

A sequence of images of the deforming samples was collected for both the slow and fast tests. The slow-rate test imaging was controlled by Correlated Solutions VicSnap software with NI-DAQ synced data collection from the MTS FlexTest Controller. The imaging setup included two Point Grey Research 5MP Grasshopper monochromatic CCDs (\(2448 \times 2048\) pixels) with 50-mm Schneider lenses, viewing the front and back surfaces of the samples, with a frame rate of 2 fps. The fast-rate test imaging included a Phantom V7 high-speed camera \((800 \times 600\) pixels) with a 24–85 mm lens, viewing the front of the samples, and controlled by Phantom camera software based on a trigger sent by the MTS FlexTest controller at the start of the test, with a frame rate of 3200 fps and a 0.3-ms exposure. Black and white fiducial markings applied to the sample close to the COD gage knife edges enabled a secondary COD measurement via a digital image correlation (DIC) algorithm (Chu et al. 1985) based on a custom image tracking script in a software package called ImageJ (Schneider et al. 2012). Since the markings were not precisely at the COD knife edges, these were not used to determine the COD measurements that served as the basis for the Challenge questions. In hindsight, these markings were useful for capturing the COD measurement after the Epsilon COD gages had fallen off the samples at the onset of unstable crack growth. The displacements from the COD gages and the DIC tracking of the markings will be compared in the next section.

3.1.2 Test results and observations

Load versus COD profiles

Seven of the eight samples tested at 0.0254 mm/s and all seven of the samples tested at 25.4 mm/s in the Structural Mechanics Laboratory failed along the B–D–E–A path defined in Fig. 11, while Sample 30 tested at 0.0254 mm/s failed along the A–C–F path. Figure 13 contains the load-COD1 profiles for all of the samples. For the samples that failed via the B–D–E–A path, the COD1 gage fell off the sample at the onset of unstable crack growth, so the load-COD1 profiles are all truncated to the crack initiation point. The load-COD1 profile of Sample 30 has been truncated to when the crack first initiated (in the A–C ligament). At each loading rate, the load-COD profiles for the B–D–E–A samples show that these samples have repeatable behavior through peak load, with variation in the COD1 value at crack initiation. In comparing the responses from the two loading rates, the faster loading rate led to higher peak loads, smaller COD1 values at unstable crack initiation, and less spread in COD1 values at unstable crack initiation.

The failures of the B–D and D–E ligaments occurred nearly simultaneously and were indistinguishable in the load-displacement data. The sequential still images collected during testing did not provide sufficient temporal resolution to clearly identify which ligament failed first. The high speed camera used during the high rate testing lacked the needed spatial resolution to definitively identify the first ligament failure. Later post-challenge observations at the University of Texas indicated that ligament D–E likely failed first for the slower rate load case (Gross and Ravi-Chandar 2016), as described in detail in “Post-Challenge experimental observations from the Ravi-Chandar Lab at University of Texas at Austin” section of “Appendix 2”. The load-COD1 profile of Sample 30 falls in line with all the other samples up to failure, with the A–C ligament failing at least 0.5 mm earlier than any B–D–E failure in the other samples. Figure 14 compares the load-displacement profiles for Samples 11 and 30. The load-COD1 profiles are remarkably similar up to the A–C failure of Sample 30, but the load-COD2 profiles deviate earlier at around 1.4 mm, well before peak load. The load-clevis LVDT behavior is nearly identical up to the crack initiation in notch A of Sample 30. The early failure of Sample 30 is thought to be an outlier based on fractography, as will be discussed later in Sects. 3.1 and 3.3.

Figures 15 and 16 show the load-COD measurements for both COD gages with six associated in situ images for Samples 11 and 27, representing typical sample behavior for each displacement rate. The force-COD1 data was used extensively for evaluation of predictions in Sect. 5, and the COD2 curves and corresponding images are included here for completeness. For Sample 11 at the 0.0254 mm/s displacement rate, the COD1 gage, tracking the opening of notch B where a crack formed, lags behind COD2 for images 1 and 2, catching up by image 3, and then increasing more rapidly until failure. Alternatively, for Sample 27 at the 25.4 mm/s displacement rate, the COD1 gage lagged for most of the high-loading regime, except immediately before failure. For both loading rates, considerable strain localization is evident in the B–D, D–E and A–C ligaments as the tests progressed. Videos of the tests for Samples 11 and 27 are available in the Supplementary Information.

After the conclusion of the challenge, Team E performed follow-up DIC analysis of the fiducial marking motion for Samples 11 and 27 using their custom feature tracking algorithm in ImageJ. The core purpose of this analysis was to evaluate the change in COD with force after unstable fracture when the clip gage extensometers detached. The fiducial marking displacements were slightly less than the COD gages since the fiducial markings were inboard of the knife edges in each notch. Figure 17 includes the load versus COD1/COD1-DIC (the fiducial marking displacements across the notch with the COD1 gage) curves for Samples 11 and 27. COD1-DIC measurements are similar in profile to the COD1 measurements. For Sample 27, the frame rate was fast enough to capture a few measurements during the load drop; the load versus COD1-DIC curve at the first failure shows that the load immediately drops with very little change in COD1. This near-vertical unloading associated with unstable cracking is used later to compare to model predictions.

3.2 Confirmation observations from the Sandia Material Mechanics Laboratory

The Sandia Material Mechanics Laboratory provided four tests to confirm the results independently from the Sandia Structural Mechanics Laboratory: three at the slow displacement rate (Samples 13, 23, and 29) and one at the fast displacement rate (Sample 20). The tests were performed on an MTS 311.21 four-post load frame under LVDT displacement control using a FlexTest 40 digital controller. The LVDT had a range of \(\pm 100\) mm (SN 645LS) with a maximum calibrated error of \(\pm 0.35\,\%\) across the entire range. The Interface model 1020AF load cell (SN 3069) had a capacity of 55,600 N with a maximum calibrated error of \(\pm 0.45\,\%\) in tension. The same COD gages were used by both labs, although the gages were independently calibrated at the time of testing in the Material Mechanics Laboratory with an MTS Calibrator model 650.03 with a maximum calibration error of \(\pm 1\,\%\). The clevis grips were the same model used by the Sandia Structural Mechanics Laboratory. Angular and concentric misalignments between the two grips were compensated using an MTS model 609 alignment fixture. Three-dimensional (3-D) printed plastic spacers ensured that the test coupon was located in the center of the wide clevis grips. Type K thermocouples with 0.25 mm wide junction tips were spot welded in the center of the B–D ligament (Position 1) and in the A–C ligament (Position 2) to monitor the thermal history during the tests for Samples 20 and 29.

All four samples tested in the Material Mechanics Laboratory failed along the B–D–E–A path. The load-COD1 profiles for these samples are plotted with the data from the Structural Mechanics Laboratory in Fig. 18. Good agreement between the data demonstrates repeatability of the experimental results at two independent labs.

Thermal histories of ligaments B–D and A–C: a Sample 29 at the slow displacement rate; and b Sample 20 at the fast displacement rate. Note that the scales of the axes are not the same. The thermal rise in the fast test is delayed relative to the mechanical event, presumably because of the time required for heat to conduct to the thermocouple

Figure 19 contains the thermal histories of ligaments B–D (Position 1) and A–C (Position 2) of Samples 20 and 29, measured during the tests via the thermocouples installed on the test samples. Generally, the temperature rise on the surface in the B–D ligament during the slow rate test was less than \(10\;^{\circ }\hbox {C}\), whereas the temperature rise during the fast rate test was greater than \(45\,^{\circ }\hbox {C}\). For both samples, the temperature rise in the B–D ligament was greater than that in the A–C ligament. These thermal measurements are only approximate values; the thermal resolution of the thermocouple readout was not characterized. The thermocouple size was 1-mm, and its position may not have been precisely at the midpoint of the ligament between the notch and hole. The instantaneous spikes at the end of the slow rate data and during the deformation process in the fast rate test are artifacts, likely caused by the motion of the thermocouple wire, and are not representative of the actual temperature. Beyond these details, the major trends are a greater temperature rise in the fast rate test and in the B–D ligament where the samples failed.

3.3 Collation of experimental values for the challenge assessment based on both Sandia Laboratories’ testing

Prior to collating the experimental values for the QoIs of the Challenge, two aspects of the experimental results require additional discussion. The first aspect is that one of the specimens followed the path A–C–F, while all the rest of the specimens followed the path B–D–E–A. While it may be tempting to consider the one experiment indicating path A–C–F to be an outlier, and not representative of the response of the specimen, any such decision must be based on proper evaluation of the underlying reasons for the observed response. We recall that in the 2012 Challenge, one specimen exhibited a different path from all the rest, but a careful analysis indicated that this response was the nominal response to the challenge problem, while the other dominant response observed in the experiments was due to systematic deviations in the specimen geometry. In the present Challenge, Sample 30 exhibited path A–C–F, while all others followed the path B–D–E–A; geometrical measurements identified this specimen to have a greater out-of-plane warpage than all other specimens. However, no causal link has been established to connect the lack of flatness to the observed failure. Fractographic evaluation of the failed surface A–C of Sample 30 indicated that the failure was not consistent with typical tensile failures observed previously (see section “Fractographic observations” of Appendix 2). Based on these factors, it is considered that the failure along A–C is not the expected or nominal response of the specimen and that the correct failure path identified from the experiments is along path B–D–E–A.
The second issue is that since the COD gages fell off the samples at the initiation of unstable crack growth at a force greater than 90 % of the peak load, two of the QoIs posed in the original challenge (the COD1 and COD2 values when the load had dropped by 10 and 70 % of the peak load) were not directly measured; only the questions related to the force at COD1 of 1, 2, and 3 mm, the peak load and the crack path could be used for comparison to the computational predictions. Additional QoIs that were not listed in the original Challenge but that help characterize the failure become necessary in order to compare the experiments and simulations. Such additional QoIs must be identified carefully so as to maintain the original intent of the challenge. While there are many options for this selection, it is clear that the experimental results themselves point to key features of the response that should be predictable: all experiments indicated unstable crack initiation and growth through the ligaments B–D and D–E. Therefore, three additional QoIs are chosen from the experiments: the binary quantity on the nature of crack initiation (stable/unstable), and the force and COD1 values at the initiation of unstable crack growth. The specific values of the QoIs obtained from the challenge experiments are summarized in Tables 2 and 3, including the force and COD1 values when unstable crack propagation began. As can be seen from the results, the forces obtained at the different COD levels, at the peak and at onset of unstable fracture, do not exhibit a large scatter: about \(\pm 3.2\,\%\) of the average for the slow-rate tests and \(\pm 1.8\,\%\) of the average for the fast-rate tests. In contrast, the COD value at onset of unstable crack propagation had significantly greater variation, with the range being \(\pm 19\,\%\) of the average for the slow-rate tests and \(\pm 5.4\,\%\) of the average for the fast-rate tests.
This scatter in the load and COD values is intrinsic and points to an important issue in understanding the nature of the problem and generating models: fracture is a much more stochastic process than deformation.
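One plausible reading of a scatter quoted as "\(\pm x\,\%\) of the average" is half of the observed range divided by the mean. The sketch below implements that reading; the interpretation, the function name, and the sample values are assumptions for illustration and are not the tabulated experimental data.

```python
def half_range_percent(values):
    """Scatter expressed as +/- percent of the mean (half the range over the mean)."""
    mean = sum(values) / len(values)
    return 100.0 * (max(values) - min(values)) / (2.0 * mean)


# Hypothetical measurements, for illustration only.
example_scatter = half_range_percent([90.0, 100.0, 110.0])  # +/- 10 % of the mean
```

A symmetric "\(\pm\)" band of this kind implicitly assumes that the mean sits near the middle of the range; for skewed data, the minimum and maximum would be better reported separately.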

4 Brief synopsis of modeling approach

In this section, a brief synopsis that compares and contrasts the varied approaches employed for prediction is provided. Each approach illustrated in subsequent appendices has been partitioned into methods and models in this section. The methods component highlights the character and solution of the partial differential equations while the modeling component focuses on the constitutive models requisite for localization, crack initiation, and unstable propagation. The selected partition and array of preferred terminology only seeks to be helpful. One must be careful to note that disparate solutions can stem from the application of the same methods and model.

The finite element method was employed by all teams except Team K who instead employed the Reproducing Kernel Particle Method (RKPM). Although most teams employed dynamics to characterize the unstable process, some did adopt a quasi-static approach that neglected inertial effects. Both implicit and explicit schemes for time integration were used. These characteristics are grouped into Solver in Table 4. Higher rates of loading can lead to temperature effects which can be modeled through the inclusion of energy balance. The Coupling label differentiates between the inclusion of thermo-mechanical coupling (segregated) and approaches that employ variations of the adiabatic assumption. Teams that did not utilize a coupled thermo-mechanical model attempted to capture the effects of temperature through the material fitting process. The application of the loading is categorized as Boundary conditions. An important boundary condition in the model is the method used to define the pin loading. Methods included specimen-pin contact, a contiguously meshed pin, and the direct application of essential boundary conditions to the surface of the specimen. For approaches that employ contact, the pin is specified as deformable or rigid and the specimen-pin interface is classified as having friction or being frictionless. In Table 4, a label describing the frictionless contact of a rigid pin would be contact, frictionless, rigid. Less rigorous approaches avoid the complexity of contact through a contiguously meshed pin. This assumption can also employ a deformable or rigid pin. In an attempt to approximate specimen rotation relative to the pin, the contiguously meshed pin can both translate and rotate (about its centerline). The label rotating communicates that the contiguously meshed pin is both rotating and translating while the label fixed implies a contiguously meshed pin that can translate but not rotate. 
Thus, the label contiguous, rotating, deformable would highlight a contiguously meshed, deformable pin that can rotate about its centerline. The last simplification involves the direct application of displacements to surface nodes. Although this approach can accommodate translation, the direct application of displacements on a patch of nodes will constrain specimen rotation and is thus labeled surface nodes, fixed.
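The boundary-condition labelling scheme described above can be summarized as a small data structure. The following is a sketch only: the class and field names are hypothetical, and only the label strings follow the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PinBoundaryCondition:
    """Hypothetical container for the pin-loading categories described in the text."""
    method: str                        # "contact", "contiguous", or "surface nodes"
    friction: Optional[bool] = None    # meaningful for "contact" only
    rotating: Optional[bool] = None    # meaningful for "contiguous" only
    deformable: Optional[bool] = None  # meaningful for "contact" and "contiguous"

    def label(self) -> str:
        pin = "deformable" if self.deformable else "rigid"
        if self.method == "contact":
            interface = "friction" if self.friction else "frictionless"
            return ", ".join(["contact", interface, pin])
        if self.method == "contiguous":
            motion = "rotating" if self.rotating else "fixed"
            return ", ".join(["contiguous", motion, pin])
        if self.method == "surface nodes":
            # Displacements applied to a patch of nodes constrain rotation.
            return "surface nodes, fixed"
        raise ValueError(f"unknown method: {self.method}")


rigid_contact = PinBoundaryCondition("contact", friction=False, deformable=False).label()
```

Keeping the three methods in one structure makes the hierarchy explicit: only contact models carry a friction attribute, and only contiguously meshed pins carry a rotating or fixed attribute.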

In addition to specifying the balance laws and boundary conditions, the element type, discretization, and numerical approach for crack initiation and propagation are highlighted. Regarding element technology, approaches that employ full integration and reduced integration are distinguished via Element type. In this brief summary, fully integrated refers to the deviatoric response. The pressure is almost always on a lower-order basis to avoid volumetric locking. Reduced integration implies element formulations that seek to reduce computational time through a single integration point and require hourglass stiffness and/or viscosity to suppress zero-energy modes (Reese 2005). The level of refinement is noted in Discretization. The in-plane element size, through-thickness element size, and degrees of freedom are labeled IP, TT, and DOF, respectively. If a single element was employed in the through-thickness direction, 2D is appended. The method employed for free surface creation is labeled Fracture methodology. The most common methodology employed was element deletion. Elements may be loaded and unloaded (via damage evolution) prior to deletion. Teams employing criteria (of loaded elements) may have also employed a crack-band model for unloading. Crack band models attempt to maintain a common measure of surface energy (Bazant and Pijaudier-Cabot 1988). Teams also employed surface element approaches which regularize the effective surface energy through a characteristic length scale. Those paths were seeded or adaptively simulated through X-FEM (Dolbow and Belytschko 1999). The fracture process originates from a smooth notch having a bounded stress concentration. After crack initiation, the process is over-driven. This particular problem may exhibit less sensitivity to non-regularized methods (de Borst 2004) because solutions will be less sensitive to the modeled fracture resistance.
The final category, Uncertainty, summarizes each team’s effort to characterize uncertainty in the methods and/or models. For this effort, all teams employed bounds to characterize uncertainty. Those bounds are partitioned into geometry, boundary conditions, and material parameters.

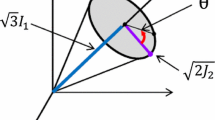

The solution of the balance laws requires the specification of a constitutive model. Both the literature and the provided data characterize Ti–6Al–4V sheet as anisotropic and having rate and temperature dependence for the applicable loading rates. The flow characteristics of the models used by the different teams are noted under Plasticity in Table 5. The yield function and the hardening, labeled Yield function and Hardening, respectively, may have rate and temperature dependence. Table 5 contrasts yield functions which employ a single invariant (von Mises, \(J_{2})\), or multiple invariants \((J_{2}/J_{3})\) with anisotropic yield surfaces [Hill (Hill 1948), Cazacu–Plunkett–Barlat (Cazacu et al. 2006)]. The labels Hill and CPB06 represent the Hill and Cazacu–Plunkett–Barlat yield surfaces, respectively. The hardening is characterized through a functional form. No distinction is made between approaches that employ only the equivalent plastic strain and those that employ additional internal state variables for hardening. Models for fracture/failure are partitioned into Criteria and Damage evolution. Criteria refers to the failure metric for a material point. The post-failure response may be abrupt or be governed by an additional law for unloading (such as the crack-band model). Damage evolution indicates whether a model includes the micromechanics of the failure process through a softening response termed Damage accumulation. That response may depend on the evolution of an additional internal state variable (such as void volume fraction) or a post-processed quantity. Continuum damage mechanics approaches are contrasted with methods that lump damage evolution into an effective traction-displacement law. The Calibration label in Table 5 indicates whether the team employed the provided tension and shear data for model calibration.

5 Comparison of predictions and experiments

5.1 Comparison of scalar quantities of interest and crack path

In real-world engineering scenarios, modeling is often used to predict scalar performance metrics such as the maximum allowable service load that a component can support or how far the component can be deformed before it will fail. Motivated by this, the challenge scenario specified certain scalar QoIs to be reported, as previously described in Sect. 2. Table 6 compares the scalar QoI predictions of all 14 teams to the experimentally measured range. In this table, several key QoIs are tabulated: the applied load when COD1 was equal to 1 and 2 mm, the peak applied load, and the crack path for both the slow and fast loading rates. In the original challenge, the teams were asked to predict the COD1 value when the load had dropped by 10 and 70 % below peak load. However, as discussed earlier, these QoIs were not experimentally measurable quantities due to the dynamic nature of the unstable crack initiation and growth. This was the reason for the formulation of alternate QoIs described in Sect. 3.3. Therefore, Table 6 includes two additional columns for these additional QoIs: COD1 and force values at the point of unstable crack initiation. These QoIs were readily extracted from the force-COD curves reported by each team. A sudden drop in the load at a fixed COD1 level was taken as an indication that unstable fracture was predicted; the force and COD1 corresponding to this transition were taken to be the predicted failure load and COD1. Some teams predicted a gradual drop in the load, with either an increasing or decreasing COD1 level, indicative of stable fracture. In these cases, it was not possible to evaluate the alternative QoI.
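The extraction of the alternative QoIs from a predicted force-COD1 curve can be sketched as follows. This is an illustrative procedure under stated assumptions, not the procedure used by the organizers: the function name and both thresholds (the minimum load drop as a fraction of peak load, and the maximum COD1 change over which the drop occurs) are hypothetical and would depend on the output interval of each simulation.

```python
def find_unstable_initiation(cod, force, min_drop_fraction=0.10, max_cod_step=0.01):
    """Return (COD1, force) at the onset of an abrupt load drop, or None.

    A step counts as unstable when the load falls by at least
    min_drop_fraction of the peak load while COD1 changes by no more
    than max_cod_step (same length unit as cod) between output points.
    """
    peak = max(force)
    for i in range(len(force) - 1):
        load_drop = force[i] - force[i + 1]
        cod_change = abs(cod[i + 1] - cod[i])
        if load_drop >= min_drop_fraction * peak and cod_change <= max_cod_step:
            return cod[i], force[i]
    return None  # gradual softening only: interpreted as stable fracture


# Hypothetical curves (mm, kN), for illustration only.
abrupt = find_unstable_initiation([0.0, 1.0, 2.0, 3.0, 3.005, 3.01],
                                  [0.0, 20.0, 24.0, 23.5, 10.0, 2.0])
gradual = find_unstable_initiation([0.0, 1.0, 2.0, 3.0, 4.0, 5.0],
                                   [0.0, 20.0, 24.0, 22.0, 19.0, 15.0])
```

In the first hypothetical curve the load collapses at essentially fixed COD1, so the point just before the drop is returned; in the second the load decays while COD1 keeps increasing, so no unstable initiation point is reported.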

The entries in the expected value column for the scalar QoI metrics reported in Table 6 are color coded to indicate whether the predicted values were consistent with experimental observation. Black values were within the range of experimental scatter, with an additional buffer of \(\pm 10\,\%\) added to the experimental range. This somewhat arbitrary buffer is intended to allow for the possibility of ‘valid’ scenarios outside of the limited number of experimental observations. Numbers reported in the expected value column that are color coded red or blue indicate predictions that are high or low, respectively, compared to the buffered experimental range.
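The color coding can be expressed as a simple classification rule. The sketch below assumes that the \(\pm 10\,\%\) buffer is applied multiplicatively to each end of the experimental range; the text does not specify exactly how the buffer was applied, and the function name and example values are hypothetical.

```python
def classify_prediction(predicted, exp_min, exp_max, buffer=0.10):
    """Return 'black', 'red' (high), or 'blue' (low) relative to the buffered range."""
    lower = exp_min * (1.0 - buffer)  # assumed form of the buffer on the lower bound
    upper = exp_max * (1.0 + buffer)  # assumed form of the buffer on the upper bound
    if predicted > upper:
        return "red"
    if predicted < lower:
        return "blue"
    return "black"


# Hypothetical experimental range of 20 to 22 (arbitrary units).
codes = [classify_prediction(p, 20.0, 22.0) for p in (17.9, 18.5, 21.0, 25.0)]
```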

Figures 20 and 21 provide a graphical representation of the tabulated data from Table 6, comparing each team’s predictions (points) to the upper and lower bounds for the experimental range (horizontal dashed lines). Figure 20 assesses the QoI metrics for early deformation up to the point of necking whereas Fig. 21 assesses the QoI metrics for the first unstable crack event. In addition to the numerical data, an indication is made if the team did not predict the B–D–E–A crack path, or if the team predicted a stable crack growth process (e.g. slowly evolving damage) rather than an unstable crack event. Four teams predicted crack paths that deviated from the B–D–E–A path. One team (Team N) predicted a failure path of A–C–F for the slow rate test and of B–D–E–A for the high rate test. Two other teams (Teams C and G) predicted an A–C–F failure path for both the slow and fast rate tests. One team (Team F) predicted a mixed failure path (for the slow rate test, A–C failure, followed by partial failure along the B–D–E path, and then finally through the remaining ligament, C–F; and for the high rate test, B–D–E failure, followed by failure along the A–C ligament, and then finally failure through the remaining E–A ligament).

5.2 Comparison of force-COD curves

Force-COD1 curves can provide additional insights into the efficacy of the various modeling approaches. Figure 22 provides a comparison between the experimentally measured force-COD1 curves and those predicted by the teams. While this figure provides an overview of the extent of prediction scatter, it is difficult to assess any specific team’s direct comparison to experimental observations. For that purpose, Figs. 23 and 24 provide a team-by-team comparison of the same force-COD1 curves for the slow and fast loading rates, respectively. A more detailed discussion comparing the predictions to the experimentally measured values is contained in Sect. 6.

6 Discussion

The goal of the present study was to assess the predictive capability of modeling and simulation methodology when applied to a model engineering problem of ductile deformation and fracture. The success of the predictive tools relies on five elements: (1) realistic physical constitutive models for deformation and failure, (2) accurate calibration of model parameters based on available data, (3) proper numerical implementation in a simulation code, (4) representative boundary conditions, and (5) correct post-processing to extract desired quantities. The chosen problem explored several phenomenological aspects of material behavior such as elasticity, anisotropic plasticity with coupled heat generation, localization, damage initiation, coalescence, crack propagation, and final failure. Furthermore, the damage and failure process involved a competition between a tensile mode failure process and a shear mode failure process. The challenge was open to the public and agnostic with regard to the computational methods employed. The challenge merely postulated a specific set of QoIs to be predicted based on the provided geometry, material properties, and loading conditions. This report, combined with the outcome of the previous challenge, provides an assessment of the state-of-the-art in failure modeling and of areas for improvement.

6.1 Assessing agreement and discrepancy between predictions and experiments

6.1.1 Generic categorization of potential sources of discrepancy

In the context of the present challenge, multiple repetitions of data were provided on the material calibration tests to enable treatment of material property uncertainty. The as-machined specimen dimensions of the challenge geometry were also provided to enable bounding based on dimensional variability. No guidance was provided on how to use this uncertainty information and different teams chose differing approaches depending on engineering judgement and available time/resources. Most teams only utilized what was considered as the most representative features of both the material response and the geometry in calibration and simulation. Only one team, Team M, reported that they had intentionally explored geometric variations across the range of reported specimen dimensions to help bound their blind predictions. The representation of the compliant boundary condition for the shear calibration test and the pin-loaded contact boundary condition for the challenge geometry were addressed differently by each team, resulting in different responses, even in the elastic regime. Some teams accounted for anisotropy of the plastic response of the material through the use of the Hill48 or equivalent models, whereas other teams considered only the isotropic yielding model. The decision was based on engineering judgment and/or model availability. Similarly, some teams ignored the heat generation during high-rate deformation while others chose adiabatic or coupled-thermomechanical conditions. Some teams incorporated strain-based damage induced softening of the plastic response, while others used a strain-to-failure criterion based on a damage indicator function, in the spirit of the Johnson-Cook model. This lack of consensus on the modeling assumptions and techniques, as compared in Tables 4 and 5, results in significant heterogeneities in the predictions. Nevertheless, overall predictions were more consistent and in line with the experimental results than in previous challenges. 
The general improvement in predictions is likely due, at least in part, to learning and model improvement as a result of prior challenges.

Elastic stiffness Perhaps one of the most surprising outcomes of the challenge was that several teams predicted a stiffness in the elastic regime that deviated \(>\)10 % beyond the experimental slope. When predictions were in error, they were all consistently too stiff relative to the experimental stiffness. For most if not all of the teams in error, the stiffness was overestimated due to an unrealistic representation of the pin loading boundary condition (e.g. no free rotation at the pin). A comparison of the results for all of the teams suggests that if similar, realistic pin-loading boundary conditions had been adopted by the teams, all of the results would have been more consistent with the experimental elastic slope. It is also interesting to speculate that the elastic slopes were better modeled in the previous challenge because data from a compact tension specimen with pin loading were provided to calibrate models for the blind prediction.

Yield surface The yield behavior and all post-yield deformation/failure processes can depend on extrinsic factors of the environment and loading conditions, including strain-rate, temperature, triaxiality, Lode angle, and load-path history. In addition, yield behavior can depend on intrinsic aspects of the material itself such as grain size, grain shape, crystal structure, phase distribution, etc. Rarely are all of these extrinsic and intrinsic factors described in a single plasticity model, due in part to the limited calibration data that is available, and also to uncertainty on the proper mathematical descriptions. In the absence of a comprehensive, universally-accepted plasticity law, and all the necessary data to calibrate its parameters, each team chooses to represent some subset of the dependencies. Two characteristics of the challenge material response that were included by some teams in their constitutive model description of the material are the dependence of the yield locus on Lode angle and material anisotropy. Wrought titanium alloys are known to display plastic anisotropy. The test data provided, be it the uniaxial tension test data or the shear test data, indicated some anisotropy in the material’s response. Comparison of the tension test data against the shear test data also indicated dependence of the yield surface on Lode angle. Several teams made note of this behavior, pointing out that use of a von Mises yield locus (which has no dependence on Lode angle) resulted in a poor fit between the model response and the shear test data when the tensile test data was utilized to determine the model parameters. This is because the challenge material yielded under shear loading at a stress approximately 0.88 times that predicted by a von Mises model (indicating that the yield surface for this alloy is closer to a Tresca-like yield surface).
To account for these response characteristics (anisotropy and Lode angle dependence) several teams (B, E, F, I, J, and M) made use of material models that are capable of capturing these dependencies. (Teams C and H accounted for some of these dependencies in an approximate way, using different sets of material properties/models in different regions to account for the expected material response characteristics.) Most of these teams (all, except B and C) made use of the Hill plasticity model, with its ability to capture anisotropy and/or Lode angle dependence of the yield locus. While teams that accounted for Lode angle (\(J_{3}\)) dependence and/or sheet anisotropy generally produced better elastoplastic predictions, the Challenge may not have been able to sufficiently discriminate between the relative importance of these two different contributions. Team I noted that the Hill yield function could be utilized to account for the Lode angle dependence of the yield locus without incorporation of material anisotropy, with acceptable results. Analyses by Team H and Team J concluded that an isotropic model would be more likely to predict failure along the incorrect path A–C–F, whereas the models with Lode angle dependent yield loci were necessary to drive failure to the correct B–D–E–A path. The results suggest that models incorporating Lode angle dependence of the yield locus were able to predict the elasto-plastic response more accurately (perhaps prior to the onset of localization). Predictions of loads and CODs by teams using the von Mises yield criterion (which has no Lode angle dependence) differed by about 10 %. Several teams also incorporated anisotropy in addition to Lode angle dependence of the yield locus.
Based on the QoIs requested, it is difficult to ascertain if the inclusion of anisotropy for the challenge problem was truly necessary; however, post-challenge assessments carried out by one team suggest that alternate, more intrusive QoIs (such as inter-ligament strains) are more sensitive to the incorporation of anisotropy and if used may have resulted in a more conclusive determination of the importance of anisotropy (Gross and Ravi-Chandar 2016).
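The observation that the shear yield stress was about 0.88 of the von Mises prediction can be compared against the two classical isotropic criteria with a short calculation. For a uniaxial yield stress \(\sigma_y\), von Mises predicts yielding in pure shear at \(\sigma_y/\sqrt{3}\) and Tresca at \(\sigma_y/2\). The sketch below is illustrative; the variable names are hypothetical and the 0.88 value is the approximate figure quoted in the text.

```python
import math

# Shear yield stress as a fraction of the uniaxial yield stress.
von_mises_shear_ratio = 1.0 / math.sqrt(3.0)  # about 0.577
tresca_shear_ratio = 0.5

# Tresca prediction relative to the von Mises prediction in pure shear.
tresca_over_von_mises = tresca_shear_ratio / von_mises_shear_ratio  # about 0.866

observed_over_von_mises = 0.88  # approximate value quoted in the text
gap_to_tresca = abs(observed_over_von_mises - tresca_over_von_mises)
gap_to_von_mises = abs(observed_over_von_mises - 1.0)
```

The Tresca criterion corresponds to a ratio of about 0.87, which is much closer to the observed 0.88 than the von Mises value of 1.0, consistent with the description of the yield surface as Tresca-like.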

Work-hardening Calibration and extrapolation of the hardening behavior was typically handled either by fitting specific functional forms or by a spline/multi-linear approach. Models that represent the stress–strain curve through splines or other piecewise functions provide greater flexibility. On the other hand, functional forms of work hardening such as the Swift and Voce forms are perhaps cheaper computationally because they reduce the number of simulations required for calibration and uncertainty quantification. While the additional flexibility associated with the added parameters in a spline or piecewise-linear approach may at first seem more accommodating, the alternative functional forms were generally more successful in the elastoplastic regime. In fact, among the teams that had the most accurate quantitative predictions for the elastoplastic regime (Teams E, H, I, J), none of them used a spline or piecewise-linear approach. In discussion, one team noted that the flexibility of the extra degrees of freedom in a spline or piecewise linear approach can be challenging to calibrate given limited data, can be more difficult to assess in terms of uncertainty quantification, and can also be difficult to incorporate into coupled physics models such as a thermomechanical coupling.
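For reference, the Swift and Voce hardening laws mentioned above have the following commonly used forms, each with three parameters. The sketch is illustrative only: the parameter names follow one common convention, and the numerical values are hypothetical rather than calibrated to the challenge data.

```python
import math


def swift(eps_p, K, eps0, n):
    """Swift power law: sigma = K * (eps0 + eps_p)**n (unbounded hardening)."""
    return K * (eps0 + eps_p) ** n


def voce(eps_p, sigma0, Q, b):
    """Voce law: sigma = sigma0 + Q * (1 - exp(-b * eps_p)) (saturating hardening)."""
    return sigma0 + Q * (1.0 - math.exp(-b * eps_p))


# Hypothetical parameters (MPa where applicable), for illustration only.
strains = [0.0, 0.05, 0.1, 0.2, 0.5]
swift_curve = [swift(e, 1300.0, 0.01, 0.1) for e in strains]
voce_curve = [voce(e, 900.0, 200.0, 15.0) for e in strains]
```

The two forms extrapolate differently beyond the calibrated strain range: the Swift stress keeps rising, whereas the Voce stress saturates at sigma0 + Q. With only three parameters each, both are considerably more constrained than a spline or multi-linear representation.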

Thermal effects These were crucial for this class of materials, especially under the moderate-rate loading conditions, where the conversion of plastic work to heat and heat conduction both play a role in the local response. Load versus COD curves in the fast-rate case showed a rounded peak in the force-displacement curve rather than the flat-topped peak of the slow-rate case. Some teams that incorporated thermo-mechanical coupling (e.g. Teams G, H, and M) or adiabatic conditions (Team J) were better able to capture this feature at the faster rate. Several teams reported ambiguity in determining the appropriate thermal work coupling parameter, i.e. the Taylor–Quinney coefficient.
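
To indicate why the choice of Taylor–Quinney coefficient matters, the sketch below estimates the adiabatic temperature rise \(\Delta T = \beta W_p / (\rho c_p)\), where \(W_p\) is the plastic work per unit volume. This is a generic textbook estimate under the assumption of no heat conduction, not any team's model. The density and specific heat are nominal handbook-style values assumed for Ti–6Al–4V, and the stress–strain points and the two values of \(\beta \) are placeholders.

```python
def plastic_work(strains, stresses):
    """Trapezoidal integral of stress over plastic strain (J/m^3 for Pa inputs)."""
    work = 0.0
    for i in range(1, len(strains)):
        work += 0.5 * (stresses[i] + stresses[i - 1]) * (strains[i] - strains[i - 1])
    return work

def adiabatic_temperature_rise(strains, stresses, beta, density, specific_heat):
    """Temperature rise (K) if a fraction beta of plastic work becomes heat
    and none of it is conducted away (adiabatic assumption)."""
    return beta * plastic_work(strains, stresses) / (density * specific_heat)

# Placeholder flow curve: plastic strain (-) and true stress (Pa).
strains = [0.0, 0.1, 0.2]
stresses = [1.00e9, 1.05e9, 1.10e9]

# Assumed nominal properties, not values supplied by the challenge.
density = 4430.0        # kg/m^3
specific_heat = 526.0   # J/(kg K)

dT_high = adiabatic_temperature_rise(strains, stresses, 0.9, density, specific_heat)
dT_low = adiabatic_temperature_rise(strains, stresses, 0.6, density, specific_heat)
print(f"beta = 0.9: {dT_high:.0f} K, beta = 0.6: {dT_low:.0f} K")
```

Because the estimate is linear in \(\beta \), any ambiguity in the coefficient carries over directly to the predicted heating and hence to the amount of thermal softening.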

Localization The experiments indicated a clear peak load and geometric softening associated with localization of the structural response. The localization was subtle in the slow-rate force-COD curves and more pronounced at the fast rate. Most teams predicted some degree of localization, at least in a qualitative sense. This is not too surprising, because the onset of localization is essentially a geometric effect due to inter-ligament necking, and a sufficiently fine mesh should capture this feature. The fidelity of the models for work hardening and thermal softening will affect the accuracy with which the onset of necking is predicted, and this is a potential source of discrepancy. It bears emphasis that the Sandia tests provide information about the onset and process of localization in both the tensile and shear testing that was carried out, but the lack of full-field measurements of strain in the challenge sample prevents further validation of the models with regard to the onset and progress of localization. Follow-up experiments at the University of Texas at Austin provided this detailed full-field ligament deformation data (Gross and Ravi-Chandar 2016).
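
The sensitivity of necking onset to the hardening law can be illustrated with the classical Considère criterion, \(d\sigma /d\varepsilon = \sigma \), for an idealized uniaxial bar. This is a simplified stand-in for the inter-ligament necking in the challenge geometry, not an analysis of it. The sketch assumes a rate- and temperature-independent true stress versus true strain curve with elastic strain neglected, and the Swift parameters are placeholders.

```python
def considere_strain(stress, eps_lo=1e-6, eps_hi=1.0, tol=1e-10, h=1e-7):
    """Bisect for the true strain at which d(sigma)/d(eps) equals sigma.

    `stress` is any callable returning true stress for a given true strain.
    """
    def g(eps):
        slope = (stress(eps + h) - stress(eps - h)) / (2.0 * h)  # central difference
        return slope - stress(eps)

    if g(eps_lo) <= 0.0 or g(eps_hi) >= 0.0:
        raise ValueError("no sign change in the bracket")
    while eps_hi - eps_lo > tol:
        mid = 0.5 * (eps_lo + eps_hi)
        if g(mid) > 0.0:
            eps_lo = mid      # still hardening faster than the stress level
        else:
            eps_hi = mid
    return 0.5 * (eps_lo + eps_hi)

# Placeholder Swift law, sigma = K * (eps0 + eps)**n, in MPa.
K, eps0 = 1400.0, 0.01

def swift_n010(eps):
    return K * (eps0 + eps) ** 0.10

def swift_n012(eps):
    return K * (eps0 + eps) ** 0.12

eps_neck_a = considere_strain(swift_n010)
eps_neck_b = considere_strain(swift_n012)
```

For the Swift form the criterion has the closed-form solution \(\varepsilon = n - \varepsilon _0\), so a modest change in the hardening exponent shifts the predicted necking strain by a comparable amount.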

Crack initiation and propagation The prediction QoI metrics for this challenge focused on elastoplasticity and the conditions for initial unstable fracture from a smooth notch. When the crack did initiate, it was overdriven and propagated catastrophically to the next arrest feature. The elements of this challenge could be successfully navigated without wrestling with resistance curve behavior or incorporating a crack length scale and the related mesh dependence of sharp crack behavior. For this reason, the current challenge does not delve into the relative merits of different crack propagation modeling approaches. Most teams had marked success in predicting the correct propagation path and in predicting the unstable rupture. Incorrect predictions may be attributed to an inadequate yield surface, work-hardening response, or boundary conditions. The adopted crack initiation models fall into three categories: a threshold strain value, voids, or damage. In the two instances of the cohesive zone and the XFEM-based XSHELL model, cracks and their location are assumed at the onset of the computation, so initiation is not a consideration. Crack propagation was modeled using element deletion, XFEM, and cohesive zone approaches. Based on the load-COD predictions, there are discrepancies in the onset and propagation of cracks. A key source of the discrepancy is the translation of model parameters from the tension and shear tests. For most mesh-based methods, it is difficult to scale the mesh size commensurately for the calibration and challenge geometries so that the calibration parameters translate appropriately. The cohesive zone and XFEM approaches seek to bypass these difficulties. At the post-challenge workshop, there was considerable discussion on the tradeoffs between relatively simple models for failure that may lack fidelity or universality and more sophisticated models that may be more challenging to calibrate or are less mature.

Numerical methods The finite element method was used by all of the teams except Team K, who used the Reproducing Kernel Particle Method. Meshes with fine zones in regions of potential crack propagation in the challenge sample, and meshes of similar density for the calibration tests, were employed with the expectation that mesh dependence would be mitigated. Only two teams, those that adopted the XFEM and a non-local approach, tried to mitigate the dependence of results on the mesh characteristics that is expected in failure modeling. This remains a source of discrepancy and is suggested as an item for improvement for blind predictions in future challenges. A second aspect is the time integration scheme. With the exception of four teams, all teams used explicit time integration; mass-scaling was used to reduce computational time. The degree of discretization varied widely, with the in-plane element sizes spanning an order of magnitude from 0.05 to 0.5 mm, and the number of degrees of freedom varying by more than two orders of magnitude from 30 K to \(>\)3 M. While a detailed sensitivity study would be needed to fully address the discretization tradeoff for a given method, there was no immediately clear general trend that more elements and higher spatial discretization consistently improved accuracy. The tradeoff between computational cost and prediction accuracy is specific to the individual method employed. It is not trivial to achieve Einstein’s complexity balance that “everything should be made as simple as possible, but not simpler”.
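
The link between element size, mass scaling, and cost in explicit time integration can be sketched with the usual stability estimate \(\Delta t \approx L/c\), where \(L\) is the smallest element dimension and \(c\) the elastic wave speed. The sketch uses the one-dimensional bar wave speed \(c = \sqrt{E/\rho }\) as a simplification (production codes typically use the dilatational wave speed and a safety factor). The modulus and density are assumed nominal values for Ti–6Al–4V, and the mass-scaling factor is hypothetical.

```python
import math

def stable_time_step(elem_size_m, youngs_modulus_pa, density_kg_m3, mass_scale=1.0):
    """Approximate stable time step (s) for explicit integration.

    mass_scale multiplies the density; the time step grows with its square root.
    """
    wave_speed = math.sqrt(youngs_modulus_pa / (density_kg_m3 * mass_scale))
    return elem_size_m / wave_speed

# Assumed nominal properties, not values supplied by the challenge.
E = 114.0e9      # Pa
rho = 4430.0     # kg/m^3

dt_fine = stable_time_step(0.05e-3, E, rho)      # 0.05 mm elements
dt_coarse = stable_time_step(0.5e-3, E, rho)     # 0.5 mm elements
dt_scaled = stable_time_step(0.05e-3, E, rho, mass_scale=100.0)

print(f"fine mesh:   {dt_fine:.2e} s")
print(f"coarse mesh: {dt_coarse:.2e} s")
print(f"fine mesh, mass scaled x100: {dt_scaled:.2e} s")
```

Under these assumptions, a tenfold reduction in element size cuts the stable time step tenfold, and a mass-scaling factor of 100 recovers it. Mass scaling adds artificial inertia, so its use in the modest-rate dynamic case needs more care than in the quasi-static case.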

Post processing A rarely discussed source of discrepancy is the process of extracting the correct quantities, translating them into the desired units, and communicating them correctly. This can be particularly problematic with a looming deadline, when the post-processing occurs in haste and rudimentary mistakes are made. In a previous challenge, for example, one team reported QoIs in incorrect units. In the current challenge, Team L accidentally accounted for reaction forces at only one boundary node rather than summing the forces on all boundary nodes. This mistake was not discovered until after the comparison to the experimental results. While the team requested to report the corrected summed values, we have also included their original mistake for the purpose of illustrating this point. Even the most accurate model can be rendered useless if these mistakes are not painstakingly avoided. In critical applications where the model has implications such as loss of life, it is recommended to use at least two independent prediction teams to provide a peer review or cross-check for glaring discrepancies.
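
The reaction-force extraction step can be guarded by a simple check of the kind sketched below. The node numbers, force values, and function name are hypothetical and do not come from any team's output; the point is only that the reported load is the sum over the whole boundary node set.

```python
def total_reaction(nodal_reactions, component=1):
    """Sum one force component over every node in the boundary set.

    nodal_reactions maps node id -> (fx, fy, fz) in newtons.
    """
    return sum(force[component] for force in nodal_reactions.values())

# Hypothetical reaction forces (N) at four nodes on the loaded boundary.
reactions = {
    101: (0.0, 5100.0, 0.0),
    102: (12.0, 4950.0, 0.0),
    103: (-12.0, 5020.0, 0.0),
    104: (0.0, 5130.0, 0.0),
}

total = total_reaction(reactions)     # force carried by the whole boundary
single = reactions[101][1]            # force at one node only

print(f"sum over {len(reactions)} nodes: {total:.0f} N, single node: {single:.0f} N")
```

Printing both numbers next to the node count makes a single-node extraction easy to spot, since it is smaller than the summed load by roughly the number of boundary nodes.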

6.1.2 Overview of agreement between predictions and experiments

It is possible to assess the efficacy of each team’s prediction methodology by comparison to the experimental outcome. The prescribed QoIs that were readily measured are the metrics associated with elasticity, plasticity, work hardening, and the onset of necking (peak force). With respect to the metrics associated with plastic deformation up to peak force, half of the teams (E, G, H, I, J, M, N) successfully predicted all prescribed quantitative metrics within the 10 % buffered experimental range. This result reinforces the notion that the elastoplastic behavior is not trivial to capture correctly. In fact, all seven teams that did not capture the peak force were also outside the buffered experimental range in their early deformation prediction at COD1 \(= 1\) mm. Six of those seven teams overpredicted the early deformation and subsequently overpredicted the peak force of the test. As discussed previously, one of the most common culprits for this overprediction was inadequate pin-loading boundary conditions.

Of the seven teams that predicted the elastoplastic response, five (E, H, I, J, M) were also able to predict the B–D–E–A crack path for both loading rates. Based on these prescribed QoIs that were experimentally measured, all five teams could claim success in the fracture challenge. However, there were additional post-Challenge QoIs that shed further light on the ability to predict the onset of cracking. Of the five teams that successfully predicted all the deformation and crack path metrics, four (E, H, I, J) also predicted the forces associated with crack advance. All four of these teams overpredicted the COD1 value for cracking for at least one of the two loading rates. This highlights a general tendency to overpredict the COD1 value for cracking: of the eight teams that predicted unstable B–D–E–A cracking, seven overpredicted the COD1 value of cracking for at least one of the loading rates. This outcome suggests the need for a more comprehensive, accurate suite of failure calibration tests.

6.1.3 Uncertainty bounds

To this point, the assessment has focused largely on a comparison of the predicted ‘expected’ values for the quantities of interest. However, each team was also allowed to report uncertainty bounds for their prediction. The use of uncertainty bounds is an important engineering tool to represent the potential sources of error in the prediction so that they do not result in misleading engineering interpretation.

The uncertainty bound also plays a psychological role in conveying the degree of confidence in a prediction. For example, reporting an uncertainty bound that varies by a factor of 10 from the lower to the upper bound helps the user of the data understand that the result is only an order-of-magnitude estimate, whereas reporting an uncertainty bound that differs by only 1 % from the lower to the upper bound suggests a much higher degree of confidence in the prediction. When teams reported uncertainty bounds, they typically ranged from \(\sim \)5–20 % variation on the expected value; however, there were cases where the uncertainty bounds were \(\sim \)1 % and other cases where the uncertainty bounds were quite large. Team B, for example, predicted an expected value for force at COD1 \(=2\) mm (fast rate) of 20,202 N, remarkably consistent with the experimental range of 20,230–20,650 N. However, for that same quantity, Team B bounded their prediction from 10,058 to 24,983 N, more than a factor of 2 from the lower bound to the upper bound. From a practical perspective, such a broad uncertainty window may lead engineers to overcompensate for the low (underpredicted) allowable minimum force.
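
The Team B example can be checked with two small interval tests. The numbers are those quoted above; the function names are illustrative and the code is only a sketch of how such a comparison might be scripted.

```python
def contains(lo, hi, value):
    """True if value lies inside the closed interval [lo, hi]."""
    return lo <= value <= hi

def overlaps(lo_a, hi_a, lo_b, hi_b):
    """True if the closed intervals [lo_a, hi_a] and [lo_b, hi_b] share any point."""
    return lo_a <= hi_b and lo_b <= hi_a

# Force at COD1 = 2 mm, fast rate, in newtons (values quoted in the text).
exp_lo, exp_hi = 20230.0, 20650.0
expected = 20202.0
bound_lo, bound_hi = 10058.0, 24983.0

expected_in_range = contains(exp_lo, exp_hi, expected)
bounds_overlap = overlaps(bound_lo, bound_hi, exp_lo, exp_hi)
bound_ratio = bound_hi / bound_lo

print(expected_in_range, bounds_overlap, round(bound_ratio, 2))
```

The expected value falls just below the experimental range while the bounds overlap it, and the upper bound is roughly 2.5 times the lower bound.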

It is possible to assess the efficacy of the predicted uncertainty bounds in their ability to bracket the experimental outcomes. This assessment can be made only on the pre-fracture QoIs associated with plasticity and the onset of necking (COD1 \(=1\) mm, COD1 \(=2\) mm, and Peak Force), since the prescribed post-fracture QoIs were not measured experimentally. Fourteen teams reported predictions on these 3 QoIs at each of the 2 loading rates, resulting in \(14 \times 3 \times 2 = 84\) predicted outcomes. Of these 84 predictions, the predicted ‘expected value’ fell within the experimentally observed range in only 17 (20 %). Of these 84 predictions, 69 were reported with uncertainty bounds and the remaining 15 were reported as expected values with no declaration of an uncertainty bound, presumably because the team ran out of time to assess the bounds. Of the 69 values reported with uncertainty bounds, there were 16 cases where the expected value was outside the range of experimental values but the bounds overlapped, at least partially, with the experimental range. To put this in perspective, while 20 % of the 84 predictions had expected values that fell within the experimental range, another 19 % (16/84) had bounds that included some portion of the experimental range. The positive conclusion is that the use of uncertainty bounds roughly doubles the chances of capturing the outcome. Conversely, the negative conclusion is that 77 % (\((69-16)/69\)) of the time that uncertainty bounds were employed, they still did not bracket the experimental outcome.
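
The percentages in this paragraph follow from the stated counts, as the short Python check below reproduces. The variable names are illustrative; the counts are those given in the text, and the last fraction follows the text's own counting.

```python
n_teams, n_qois, n_rates = 14, 3, 2
n_predictions = n_teams * n_qois * n_rates          # total predicted outcomes

expected_in_range = 17    # expected value inside the experimental range
with_bounds = 69          # predictions reported with uncertainty bounds
rescued_by_bounds = 16    # expected value outside, but bounds overlap the range

without_bounds = n_predictions - with_bounds
pct_expected = 100.0 * expected_in_range / n_predictions
pct_rescued = 100.0 * rescued_by_bounds / n_predictions
pct_not_bracketed = 100.0 * (with_bounds - rescued_by_bounds) / with_bounds

print(n_predictions, without_bounds)
print(round(pct_expected), round(pct_rescued), round(pct_not_bracketed))
```

The sum of the first two percentages, about 39 %, is the basis for the statement that uncertainty bounds roughly double the chance of capturing the outcome.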

This rather weak performance for uncertainty bounds is likely attributable to the time-consuming nature of a formal, detailed Uncertainty Quantification (UQ) analysis. Most teams instead bounded their predictions by identifying one or two key parameters that were difficult to calibrate and varying them over some range. Based on the outcome of the previous challenge, a few teams intentionally varied the dimensions of the geometry across the range of values in the manufacturing tolerances. Even this degree of rudimentary parametric analysis can be much more time-consuming than the baseline prediction, since it requires running several instantiations. There is also a tradeoff between the fidelity of the baseline prediction and the feasibility of detailed UQ analysis. For example, the fine-zoned meshes used in some cases to model the challenge sample preclude implementation of UQ in a timely manner. As with the previous challenge, integration between solid mechanics modeling and UQ has not matured to the point where it is readily accessed for time-sensitive, resource-limited predictions. Beyond the time-consuming nature of UQ, there is a lack of standardized processes for the assessment.

6.1.4 Computational efficiency

There is generally a trade-off between fidelity and speed to solution, known in cognitive psychology as the speed-accuracy tradeoff (Wickelgren 1977). In engineering practice, it is often viewed that a reasonable approximation within a short timeframe is more valuable than a highly accurate estimate obtained in a much longer timeframe. The two Sandia Fracture Challenges both provided only the most basic of constraints in this regard: all teams had the same number of months to arrive at their prediction. Yet the number of man-hours each team spent in arriving at their predictions likely varied widely, although that number may be difficult to estimate.